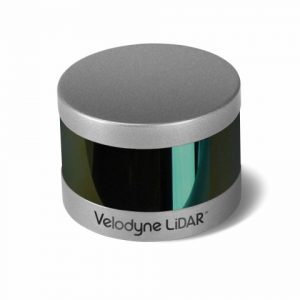

Velodyne LiDAR Announces Puck Hi-Res™ LiDAR Sensor

Velodyne LiDAR Announces Puck Hi-Res™ LiDAR Sensor, Offering Higher Resolution to Identify Objects at Greater Distances

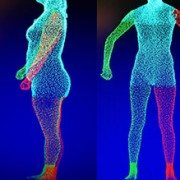

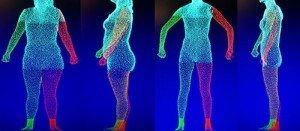

Industry-leading, real-time LiDAR sensor impacts autonomous vehicle, 3D mapping and surveillance industries with significantly higher resolution of 3D images

MORGAN HILL, Calif.–(BUSINESS WIRE)–Velodyne LiDAR Inc., the recognized global leader in Light Detection and Ranging (LiDAR) technology, today unveiled its new Puck Hi-Res™ sensor, a version of the company’s groundbreaking LiDAR Puck that provides higher resolution in captured 3D images, which allows objects to be identified at greater distances. Puck Hi-Res is the third new LiDAR sensor released by the company this year, joining the standard VLP-16 Puck™ and the Puck LITE™.

“Introducing a high-resolution LiDAR solution is essential to advancing any industry that leverages the capture of 3D images, from autonomous navigation to mapping to surveillance,” said Mike Jellen, President and COO, Velodyne LiDAR. “The Puck Hi-Res sensor will provide the most detailed 3D views possible from LiDAR, enabling widespread adoption of this technology while increasing safety and reliability.”

Expanding on Velodyne LiDAR’s groundbreaking VLP-16 Puck, a 16-channel, real-time 3D LiDAR sensor that weighs just 830 grams, Puck Hi-Res is designed for applications that require greater resolution in the captured 3D image. Puck Hi-Res retains the VLP-16 Puck’s 360° horizontal field-of-view (FoV) and 100-meter range, but narrows the vertical FoV to 20° for a tighter channel distribution – 1.33° between channels instead of 2.00° – which yields greater detail in the 3D image at longer ranges. This enables the host system not only to detect objects at these greater distances, but also to discern them better.
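The channel-spacing figures follow from spreading 16 channels evenly across the vertical FoV. The sketch below is illustrative only and is not taken from Velodyne documentation: the function names are hypothetical, the 30° vertical FoV for the standard VLP-16 Puck is an assumption (the release does not state it, though 2.00° across 15 gaps implies it), and the beam gap uses a simple arc-length approximation.

```python
import math


def channel_spacing_deg(vertical_fov_deg: float, channels: int) -> float:
    """Angle between adjacent channels, assuming they are evenly spaced."""
    return vertical_fov_deg / (channels - 1)


def beam_gap_m(spacing_deg: float, range_m: float) -> float:
    """Approximate vertical gap between adjacent beams at a given range.

    Uses arc length (range * angle in radians), which is a reasonable
    approximation for small angles.
    """
    return range_m * math.radians(spacing_deg)


CHANNELS = 16            # from the release
RANGE_M = 100.0          # from the release
HI_RES_FOV_DEG = 20.0    # from the release
VLP16_FOV_DEG = 30.0     # assumption: not stated in the release

hi_res_spacing = channel_spacing_deg(HI_RES_FOV_DEG, CHANNELS)
vlp16_spacing = channel_spacing_deg(VLP16_FOV_DEG, CHANNELS)

for name, spacing in (("Puck Hi-Res", hi_res_spacing), ("VLP-16 Puck", vlp16_spacing)):
    gap = beam_gap_m(spacing, RANGE_M)
    print(f"{name}: {spacing:.2f} deg between channels, "
          f"about {gap:.2f} m between beams at {RANGE_M:.0f} m")
```

Under these assumptions, adjacent beams at the full 100-meter range land roughly 2.3 m apart for Puck Hi-Res versus roughly 3.5 m for the standard Puck, which is why a distant object is crossed by more scan lines.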

“Building on the VLP-16 Puck and the Puck LITE, the Puck Hi-Res was an intuitive next step for us, as the evolution of the various industries that rely on LiDAR showed the need for higher resolution 3D imaging,” said Wayne Seto, product line manager, Velodyne LiDAR. “Not only does the Puck Hi-Res provide greater detail in longer ranges, but it retains all the functions of the original VLP-16 Puck that shook up these industries when it was introduced in September 2014.”

“The 3D imaging market is expected to grow from $5.71B in 2015 to $15.15B in 2020, led by the development of autonomous shuttles for large campuses, airports, and basically anywhere there’s a need to safely move people and cargo,” said Dr. Rajender Thusu, Industry Principal for Sensors & Instruments, Frost & Sullivan. “We expect Velodyne LiDAR’s line of sensors to play a key role in this surge in autonomous vehicle development, as the company leads the way in partnerships with key industry drivers, along with the fact that sensors like the new Puck Hi-Res are substantially more sophisticated than competitive offerings and increasingly accessible to all industry players.”

Velodyne LiDAR is now accepting orders for Puck Hi-Res, with a lead time of approximately eight weeks.

About Velodyne LiDAR

Founded in 1983 by David S. Hall, Velodyne Acoustics Inc. first disrupted the premium audio market through Hall’s patented invention of virtually distortion-less, servo-driven subwoofers. Hall subsequently leveraged his knowledge of robotics and 3D visualization systems to invent groundbreaking sensor technology for self-driving cars and 3D mapping, introducing the HDL-64 Solid-State Hybrid LiDAR sensor in 2005. Since then, Velodyne LiDAR has emerged as the leading supplier of solid-state hybrid LiDAR sensor technology used in a variety of commercial applications, including advanced automotive safety systems, autonomous driving, 3D mobile mapping, 3D aerial mapping and security. The compact, lightweight HDL-32E sensor is available for applications including UAVs, while the VLP-16 LiDAR Puck is a 16-channel LiDAR sensor that is both substantially smaller and dramatically less expensive than previous-generation sensors. To read more about the technology, including white papers, visit http://www.velodynelidar.com.

Contacts

Velodyne LiDAR

Laurel Nissen

lnissen@velodyne.com

or

Porter Novelli/Voce

Andrew Hussey

Andrew.hussey@porternovelli.com