zLense Announces World’s First Real-Time 3D Depth Mapping Technology for Broadcast Cameras

New virtual production platform dramatically lowers the cost of visual effects (VFX) for live and recorded TV, enabling visual environments previously unattainable in a live studio without any special studio set-up…

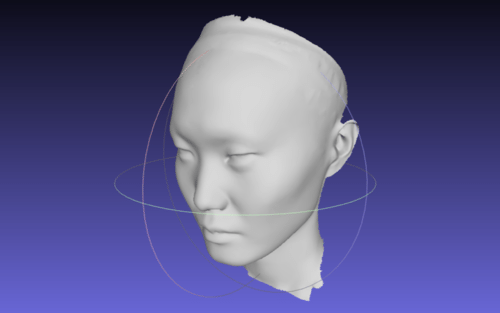

27 October 2014, London, UK – zLense, a specialist provider of virtual production platforms to the film, production, broadcast and gaming industries, today announced the launch of the world’s first depth-mapping camera solution that captures 3D data and scenery in real time and adds a 3D layer, optimized for broadcasters and film productions, to the footage. The groundbreaking, industry-first technology processes spatial information, making new, genuinely three-dimensional compositing methods possible and enabling production teams to create stunning 3D effects and utilise state-of-the-art CGI in live or pre-recorded TV transmissions – with no special studio set-up.

Utilising the solution, directors can produce unique simulated and augmented reality worlds, generating and combining dynamic virtual reality (VR) and augmented reality (AR) effects in live studio or outside broadcast (OB) transmissions. The unique depth-sensing technology allows full 360-degree freedom of camera movement and gives presenters and anchors greater liberty of performance. Directors can combine dolly, jib arm and handheld shots as presenters move within, interact with and control the virtual environment. In the near future, presenters will be able to do so using only natural gestures and motions.

“We’re poised to shake up the Virtual Studio world by putting affordable high-quality real-time CGI into the hands of broadcasters,” said Bruno Gyorgy, President of zLense. “This unique world-leading technology changes the face of TV broadcasting as we know it, giving producers and programme directors access to CGI tools and techniques that transform the audience viewing experience.”

Doing away with the need for expensive match-moving work, the zLense Virtual Production platform dramatically speeds up the 3D compositing process, making it possible for directors to mix CGI and live action shots in real-time pre-visualization and take the production values of their studio and OB live transmissions to a new level. The solution is quick to install, requires just a single operator, and is operable in almost any studio lighting.

“With minimal expense and no special studio modifications, local and regional TV channels can use this technology to enhance their news and weather graphics programmes – unleashing live augmented reality, interactive simulations and visualisations that make the delivery of infographics exciting, enticing and totally immersive for viewers,” he continued.

The zLense Virtual Production platform combines depth-sensing technology and image processing in a standalone camera rig that captures the 3D scene and camera movement. The ‘matte box’ sensor unit, which can be mounted on almost any camera rig, removes the need for external tracking devices or markers, while the platform’s built-in rendering engine cuts the cost and complexity of using visual effects in live and pre-recorded TV productions. The platform can also be used alongside existing rendering engines, VR systems and tracking technologies.

The real-time VFX capabilities enabled by the zLense Virtual Production platform include:

- Volumetric effects

- Additional motion and depth blur

- Shadows and reflections to create convincing state-of-the-art visual appearances

- Dynamic relighting

- Realistic 3D distortions

- Creation of a fully interactive virtual environment with interactive physical particle simulation

- Wide shot and in-depth compositions with full body figures

- Real-time Z-map and 3D models of the picture

For more information on the zLense features and functionalities, please visit: zlense.com/features

About Zinemath

Zinemath, a leader in reinventing how professional moving images will be processed, is the producer of zLense, a revolutionary real-time depth-sensing and modelling platform that adds third-dimension (depth) information to the filming process. zLense is the first depth-mapping camera accessory optimized for broadcasters and cinema previsualization. With an R&D center in Budapest, Zinemath, part of the Luxembourg-based Docler Group, is spreading this new vision across the film, television and mobile technology sectors.

For more information please visit: www.zlense.com

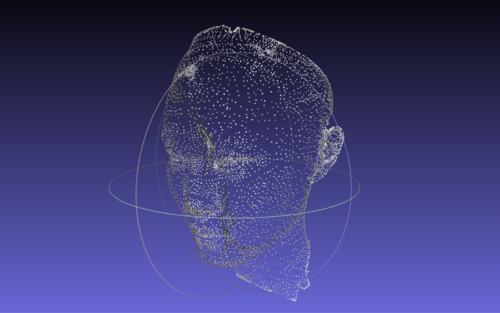

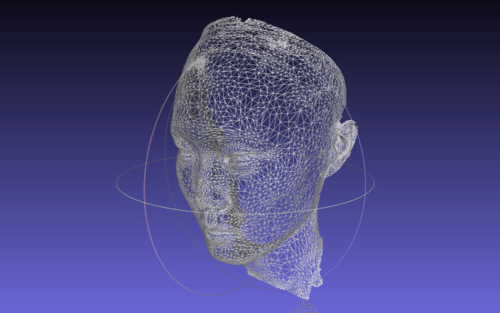

The tablet will apparently be able to scan an “advanced photogrammetric picture” with up to 4 million dots in around 2 minutes. It will also be able to capture 3D objects in motion. It uses a blend of computer vision techniques, photogrammetry, visual odometry, “precision sensor fine tuning” and other image measuring techniques, say its makers.
