Film director and cinematographer Patryk Kizny, along with his talented team at LookyCreative, put together the 2010 short film “The Chapel” using motion-controlled HDR time-lapse photography to achieve an interesting, hyper-real aesthetic. Enthusiastically received when it was released online, the three-minute piece pays tribute to a beautifully decaying late-1700s church in a small Polish village. Though widely lauded, “The Chapel” felt incomplete to Kizny, so in the fall of 2011 he began production on “Rebirth” to refine his initial story and add dimension to it.

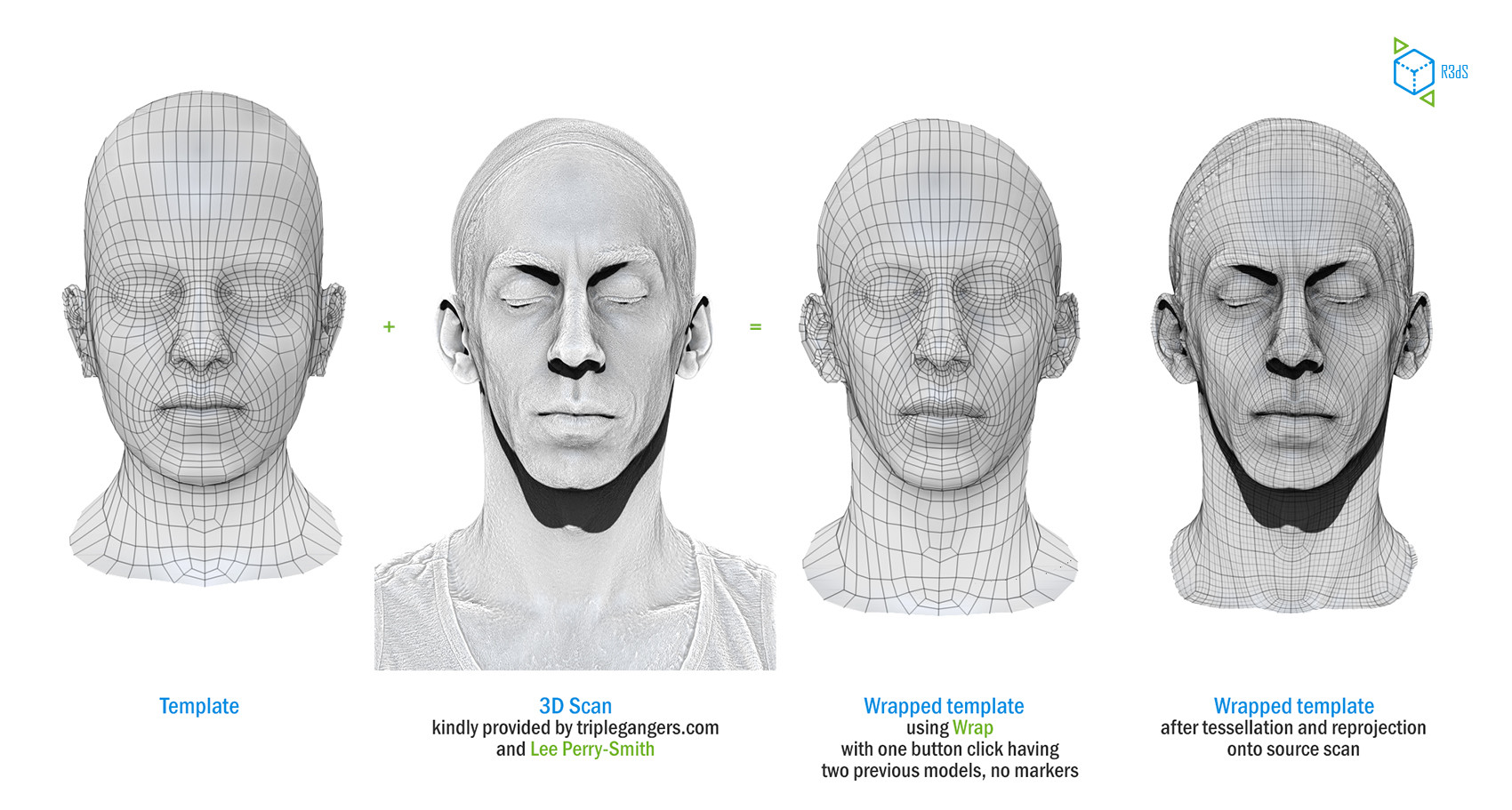

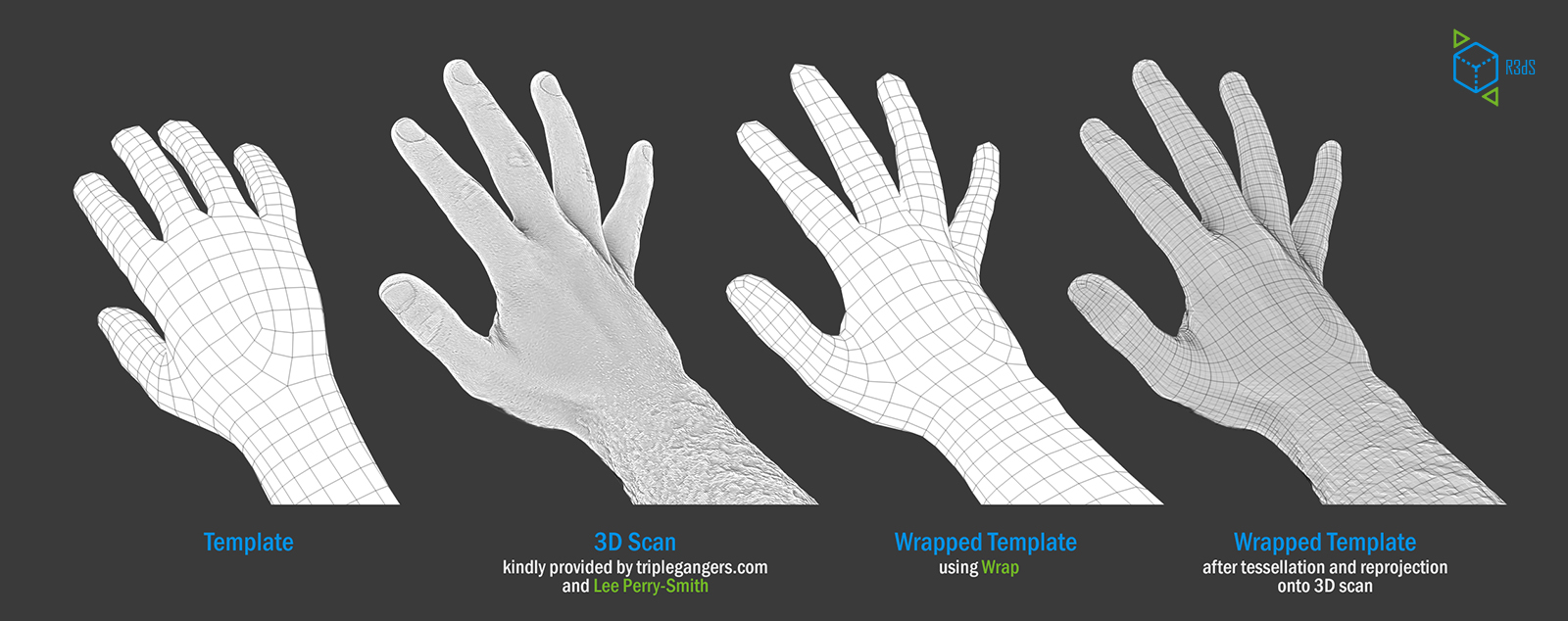

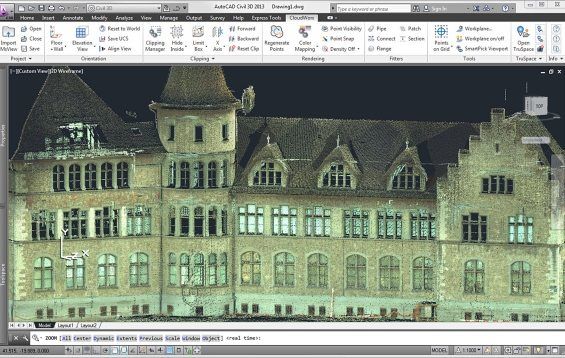

Exploring the same church, “Rebirth” comprises three separate scenes, each created with a different visual technique. Contemplative, philosophical narration and a custom orchestral soundtrack composed by Kizny’s collaborator Mateusz Zdziebko help guide the flow and the overall aspirational tone of the film, which runs approximately 12 minutes. The first scene features a point cloud representation of the chapel, with various pieces and cross-sections of the building appearing, changing and shifting to the music. Kizny rendered these point clouds, which are based on LIDAR scans of the chapel taken for this project, with Thinkbox Software’s volumetric particle renderer Krakatoa in Autodesk 3ds Max.

“About a year after I shot ‘The Chapel,’ I returned to the location and happened to get involved in heritage preservation efforts,” Kizny explained. “At the time, laser scanning was used for things like archiving, set modeling and support for integrating VFX in postproduction, but I hadn’t seen any films visualizing point clouds themselves, so that’s what I decided to do.”

EKG Baukultur, an Austrian/German company that specializes in digital heritage documentation and laser scanning, scanned the entire building in about a day from 25 different scanning positions. The collected data was then registered and processed, creating a dataset of about 500 million points, roughly half of which was used to create the visualizations.

Data processing was done in multiple stages using various software packages. First, the EKG Baukultur team registered the separate scans into a common coordinate system using FARO Scene software. The data was then exported in .PTS format and imported into Alice Labs Studio Clouds (acquired by Autodesk in 2011) for cleanup, where Kizny manually removed tripods with cameras, people, and the checkerboards and balls that had been used as scan reference targets. Next, the data was processed in Geomagic Studio to reduce noise, fill holes and uniformly downsample selected areas of the dataset. Finally, it was exported back to the ASCII .PTS format with the help of MeshLab and run through custom Python scripts so that it could be ingested by the Krakatoa importer.

Lacking a visual effects background, Kizny initially tested a number of tools to find the best way to visualize point cloud data cinematically, with varying and largely disappointing results. Six months of extensive R&D led him to Krakatoa, a tool that was astonishingly fast and a fraction of the price of comparable software designed specifically for CAD/CAM applications.
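The custom Python scripting step described above isn't detailed in the source. As a minimal sketch, assuming the common ASCII .PTS layout (a point-count header line followed by `x y z intensity r g b` on each line), a converter that rewrites the points as comma-separated values might look like this. The function names, the `x,y,z,r,g,b` header and the choice of CSV as the output are hypothetical illustrations, not the scripts or the input format actually used on “Rebirth.”

```python
import csv
import io
from typing import Iterable, Iterator, TextIO, Tuple

# One output point: position plus 8-bit RGB color.
Point = Tuple[float, float, float, int, int, int]


def parse_pts(lines: Iterable[str]) -> Iterator[Point]:
    """Parse ASCII .PTS lines, assuming the common layout: a point-count
    header line, then 'x y z [intensity] [r g b]' on each following line."""
    for line in lines:
        fields = line.split()
        if len(fields) < 3:
            # Skip blank lines and the single-value point-count header.
            continue
        x, y, z = (float(v) for v in fields[:3])
        if len(fields) >= 7:
            # x y z intensity r g b: skip the intensity column.
            r, g, b = (int(float(v)) for v in fields[4:7])
        elif len(fields) == 6:
            # x y z r g b: no intensity column.
            r, g, b = (int(float(v)) for v in fields[3:6])
        else:
            # No color information: fall back to mid-gray.
            r = g = b = 128
        yield (x, y, z, r, g, b)


def pts_to_csv(src: Iterable[str], dst: TextIO) -> int:
    """Write the parsed points as CSV under a hypothetical 'x,y,z,r,g,b'
    header and return the number of points written."""
    writer = csv.writer(dst, lineterminator="\n")
    writer.writerow(["x", "y", "z", "r", "g", "b"])
    count = 0
    for point in parse_pts(src):
        writer.writerow(point)
        count += 1
    return count


if __name__ == "__main__":
    sample = io.StringIO(
        "2\n"
        "1.0 2.0 3.0 -500 255 0 0\n"
        "4.5 5.5 6.5 -200 0 128 255\n"
    )
    out = io.StringIO()
    print(pts_to_csv(sample, out))
    print(out.getvalue(), end="")
```

For files on disk, the same function can be called with `open()` handles for `src` and `dst` (opening the output with `newline=""`, as the `csv` module recommends). Streaming line by line keeps memory flat, which matters for a dataset in the hundreds of millions of points.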

“I had a very basic understanding of 3ds Max, and the Krakatoa environment was new to me. Once I began to figure out Krakatoa, it all clicked and the software proved amazing throughout each step of the process,” he said.

Even when combining Krakatoa’s depth-of-field and motion-blur features and rendering 200 million points at 2K resolution, Kizny kept render times to roughly five to ten minutes per frame by using smaller apertures and shooting camera passes from a greater distance.

“Krakatoa is an amazing manipulation toolkit for processing point cloud data, not only for what I’m doing here but also for recoloring, increasing density, projecting textures and relighting point clouds. I have tried virtually all major point cloud processing software, but Krakatoa saved my life on this project,” Kizny noted.

Beyond rendering the point clouds and all the other CG components of “Rebirth,” Kizny also used Krakatoa for advanced color manipulation. Working with two subsets of data, a master with good color representation and a target that lacked color information, he used a Magma flow modifier and a set of nodes to cast color data from the master subset onto the target subset and interpolate it spatially, so that the two blended seamlessly in the final dataset. He also used Magma modifiers to color correct the entire dataset prior to rendering, which gave him more flexibility than color correcting the rendered frames. Working in Krakatoa with Magma modifiers also gave Kizny a comprehensive set of built-in nodes and scripting access.
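The source doesn’t describe the Magma node graph itself. To illustrate the general idea of spatially interpolating color from a colored master subset onto an uncolored target subset, the sketch below uses inverse distance weighting over the `k` nearest master points. This is a swapped-in, plain-Python technique, not Magma’s actual nodes; `idw_color_transfer`, `k` and `power` are hypothetical names, and the brute-force neighbor search is only practical for small samples, not hundreds of millions of points.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[float, float, float]


def idw_color_transfer(
    master_pos: Sequence[Vec3],
    master_col: Sequence[Color],
    target_pos: Sequence[Vec3],
    k: int = 4,
    power: float = 2.0,
) -> List[Color]:
    """Give each target point a color interpolated from its k nearest
    master points, weighted by inverse distance (brute-force search)."""
    result: List[Color] = []
    for t in target_pos:
        # Distance to every master point, nearest first; keep the k closest.
        nearest = sorted(
            (math.dist(t, m), i) for i, m in enumerate(master_pos)
        )[:k]
        # A coincident master point donates its color directly
        # (and avoids dividing by zero below).
        if nearest[0][0] == 0.0:
            result.append(tuple(master_col[nearest[0][1]]))
            continue
        # Closer master points get proportionally larger weights.
        weights = [1.0 / d ** power for d, _ in nearest]
        total = sum(weights)
        color = tuple(
            sum(w * master_col[i][c] for w, (_, i) in zip(weights, nearest)) / total
            for c in range(3)
        )
        result.append(color)
    return result
```

A target point halfway between a red and a blue master point comes out purple, while one sitting on a master point copies its color exactly; a production version would swap the sorted scan for a spatial index such as a k-d tree.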

The second scene of “Rebirth” is a time-lapse reminiscent of “The Chapel,” while the final scene shows live-action footage of a dancer. Footage for these scenes was captured using Canon DSLR cameras, a RED ONE camera and DitoGear motion control equipment. Between the second and third scenes, a short transition visualizes the church collapsing; it was created using 3ds Max Particle Flow with the help of Thinkbox Ember, a field manipulation toolkit, and Thinkbox Stoke, a particle reflow tool.

“In the transition, I’m trying to collapse a 200 million-point data cloud into smoke, then create the silhouette of a dancer as a light point from the ashes,” shared Kizny. “Even though it’s a short scene, I’m making use of a lot of technology. It’s not only rendering this point cloud data set again; it’s also collapsing it. I’m using the software in an atypical way, and Thinkbox has been incredibly helpful in troubleshooting the workflow so I could establish a solid pipeline.”

Collapsing the church proved to be a challenge for Kizny. VFX artists creating digital explosions traditionally blow up solid, rigid objects. Not only did Kizny need to collapse a point cloud, a daunting task in and of itself, but he also had to do so within the hyper-real aesthetic he’d established, in a way that would be both ethereal and physically believable. Using 3ds Max Particle Flow as the simulation environment, Kizny generated a comprehensive, high-resolution vector field with Ember, which made the field more efficient and precisely controllable. Ember was also used to animate two angels appearing from the dust and smoke, along with the dancer silhouette. The initial dataset for each angel was pushed through a vector noise field that produced a smoke-like dissolve, which was then reversed using the retiming features in Krakatoa, Ember and Stoke; Stoke was also used to add density.
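The dissolve-then-reverse approach described above can be sketched in a few lines: advect each point through a time-varying vector field, cache every frame, then play the cache backwards so the shape appears to condense out of smoke. This is a plain-Python illustration under stated assumptions, not Ember’s or Stoke’s API; the sine-based `noise_field` is a hypothetical stand-in for a real vector noise field, and forward Euler integration is used only for brevity.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def noise_field(p: Vec3, t: float) -> Vec3:
    """Hypothetical smooth, time-varying vector field standing in for a
    vector noise field: sums of sines give swirling motion plus a mostly
    upward drift in z."""
    x, y, z = p
    return (
        math.sin(1.7 * y + t) + 0.5 * math.sin(3.1 * z),
        math.sin(1.3 * z + 0.7 * t) + 0.5 * math.sin(2.9 * x),
        0.3 + 0.5 * math.sin(1.1 * x + 1.9 * y),
    )


def simulate_dissolve(points: List[Vec3], frames: int, dt: float = 0.05) -> List[List[Vec3]]:
    """Advect points through the field with forward Euler steps, caching
    every frame (frame 0 is the untouched input) so it can be retimed."""
    cache = [list(points)]
    current = list(points)
    for f in range(1, frames):
        t = f * dt
        # Move each point one step along the field's velocity at its position.
        current = [
            tuple(c + dt * v for c, v in zip(p, noise_field(p, t)))
            for p in current
        ]
        cache.append(current)
    return cache


def reverse_retime(cache: List[List[Vec3]]) -> List[List[Vec3]]:
    """Play the cached simulation backwards: the cloud starts as 'smoke'
    and resolves into its original shape on the last frame."""
    return cache[::-1]
```

Caching the forward simulation and reversing it sidesteps the much harder problem of simulating smoke that converges onto a precise target shape.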

“To create the smoke on the floor, I decided to go all the way with Thinkbox tools,” Kizny said. “All the smoke you see was created using Ember vector fields and simulated with Stoke. It was good and damn fast.”

Another obstacle was figuring out how to animate the dancer in the point clouds. Six cameras recorded a live performer, and markerless motion capture tracking was done with the iPi Motion Capture Studio package. The resulting motion data was then transferred onto a rigged virtual model in 3ds Max and used to emit particles for a Particle Flow simulation. Ember vector fields drove all the smoke-like circulation, and everything was then integrated and rendered with Krakatoa and Thinkbox’s Deadline render management system: almost 900 frames, with 3 TB of data caches for the particles alone. Deadline was also used to distribute high-volume renders and allocate resources across Kizny’s render farm.

Though an innovative display of digital artistry, “Rebirth” is also a preservation tool. Interest generated by “The Chapel” and sustained by “Rebirth” has prompted a Polish foundation to begin restoring the run-down building. Additionally, the LIDAR scans of the chapel will be donated to CyArk, a non-profit dedicated to the digital preservation of cultural heritage sites, and made widely available online.

The production is currently raising funds to complete postproduction. To support the campaign and learn more about the project, visit the IndieGoGo campaign page at http://bit.ly/support-rebirth. For updates on the film’s progress, visit http://rebirth-film.com/.

About Thinkbox Software

Thinkbox Software provides creative solutions for visual artists in entertainment, engineering and design. Developer of the high-volume particle renderer Krakatoa and the render farm management software Deadline, the Thinkbox Software team solves difficult production problems with intuitive, well-designed solutions and remarkable support. We create tools that help artists manage their jobs and empower them to create worlds and imagine new realities. Thinkbox was founded in 2010 by Chris Bond, founder of Frantic Films. http://www.thinkboxsoftware.com
