Behind the Work: Garage Magazine Augmented Reality App

With New York Fashion Week FW15 in high gear, SCANable got in on the action with a dream assignment: collaborating with The Mill, Garage Magazine, renowned makeup artist Pat McGrath, photographer Phil Poynter and Beats by Dre to bring February’s cover models to life through Garage’s smartphone app.

The Covers

Led by The Mill creative director Andreas Berner, the brief was to create five different covers, each featuring a supermodel wearing a colorful set of Beats by Dre headphones. Each model was treated with a pure CG interpretation of elements inspired by Pat McGrath’s original makeup designs: android mask, graphite scribbles, shrink wrap, crystals, and smoke.

Cara Delevingne: Android Mask

Cara’s look was inspired by Pat McGrath’s makeup for the Spring/Summer Alexander McQueen show. Once the app is activated, segments of blue armor animate from behind her head and create an android effect.

Kendall Jenner: Graphite Scribbles

Kendall is taken over by a mesh of stone 3D graphite that swoops over her whole body and face until it engulfs her in delicate body armor. The animation follows the shape of her silhouette, creating the effect that both she and the headphones are immersed in a cage of lines.

Lara Stone: Shrink Wrap

Lara appears in airtight shrink-wrap plastic, creating a smooth and almost liquid-looking cover. As Lara’s head emerges from the page, the rich color tone of the headphones begins to take over and envelop her completely back into the cover.

Binx Walton: Crystals

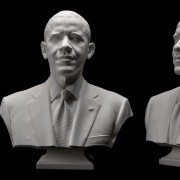

The animation appears in midair, organically building from crystals and facets to create a 3D crystal bust. The tiny, delicate, sharp crystals appear in a mask shape, gradually taking over until she is fully covered. The look is inspired by McGrath’s makeup from the Givenchy Spring/Summer 2014 show.

Joan Smalls: Smoke

Joan’s look is inspired by the movement of a smoke electric storm and the northern lights. Joan emerges from the page to the sound of her taking a deep breath. Purple smoke FX continue to build and swirl around her three-dimensional head.

The Process

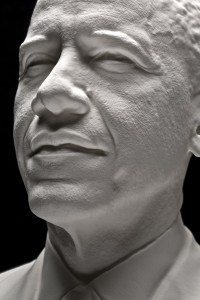

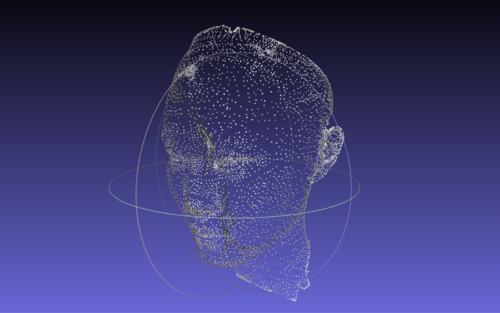

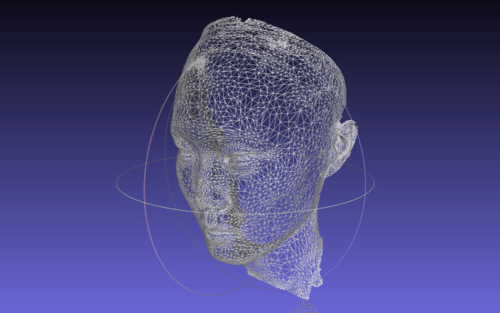

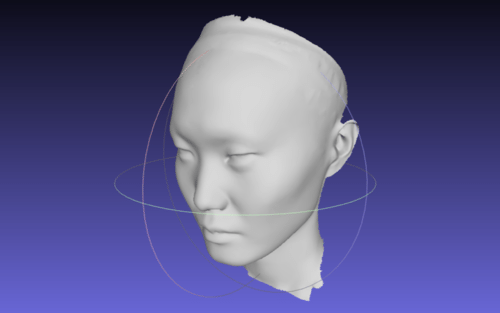

The idea was that each model would be a breathing, living organism consumed by the nature of the VFX. After Phil shot the models with bare makeup, SCANable captured a 3D scan on-set to generate full-body CG models for post-production. This allowed The Mill’s 3D team, led by Raymond Leung, to retouch and create geometry for the app.

The Mill design team then outlined the designs on top of the retouched photographs with style frames. After a final cropping and editing session with Phil, the high-res prints were sent to press.

The next step was to convert the ideas from the print component into the AR app. Garage Magazine had previously teamed up with artist Jeff Koons, creating a cover where, when using the Garage app, viewers were able to walk around a virtual sculpture. The Mill team pushed the technology further for Issue Nº8 with animation, sound and additional elements.

For the app execution, in-house 2D and 3D tools were used to create FX simulations, animations and final composites. Many of the initial ideas were limited by the technology used in real-time applications and a lack of processing power, which meant the team needed to get creative.

Kendall’s effect was particularly challenging, as her execution drew on disciplines across the CG department. We were up against resolution restrictions within the technology. Each strand grown was a culmination of particle effects, animation, texturing, modeling and lighting. But with some ingenuity and an iterative process of constant testing, updating, and retesting, her incredible look was achieved. This was the approach taken for the look of each of the models.

Unique music scores by Alex Da Kid and sound FX by Finger Music were incorporated to accompany each execution, building an even stronger interactive experience. The last step was integrating all finished animations into the actual app, done by Meiré & Meiré.

This issue of Garage is currently on stands and available for purchase online. You can download the app and watch these beauties come to life.