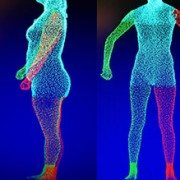

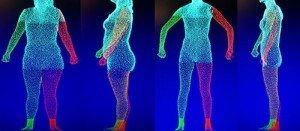

LAKE MARY, Fla., Jan. 7, 2015 /PRNewswire/ — FARO Technologies, Inc. (NASDAQ: FARO), the world’s most trusted source for 3D measurement, imaging, and realization technology, announces the release of the new FARO Freestyle3D Handheld Laser Scanner, an easy, intuitive device for use in Architecture, Engineering and Construction (AEC), Law Enforcement, and other industries.

The FARO Freestyle3D is equipped with a Microsoft Surface™ tablet and offers unprecedented real-time visualization by allowing the user to view point cloud data as it is captured. The Freestyle3D scans to a distance of up to three (3) meters and captures up to 88K points per second with accuracy better than 1.5 mm. The patent-pending, self-compensating optical system also allows users to start scanning immediately with no warm-up time required.

“The Freestyle3D is the latest addition to the FARO 3D laser scanning portfolio and represents another step on our journey to democratize 3D scanning,” stated Jay Freeland, FARO’s President and CEO. “Following the successful adoption of our Focus scanners for long-range scanning, we’ve developed a scanner that provides customers with the same intuitive feel and ease-of-use in a handheld device.”

The portability of Freestyle3D enables users to maneuver and scan in tight and hard-to-reach areas such as car interiors, under tables, and behind objects, making it ideal for crime scene data collection or architectural preservation and restoration activities. Memory-scan technology enables Freestyle3D users to pause scanning at any time and then resume data collection where they left off without the use of artificial targets.

Mr. Freeland added, “FARO’s customers continue to stress the importance of work-flow simplicity, portability, and affordability as key drivers to their continued use and adoption of 3D laser scanning. We have responded by developing an easy-to-use, industrial grade, handheld laser scanning device that weighs less than 2 lbs.”

The Freestyle3D can be employed as a standalone device to scan areas of interest, or used in concert with FARO’s Focus X 130 / X 330 scanners. Point cloud data from all of these devices can be seamlessly integrated and shared with all of FARO’s software visualization tools including FARO SCENE, WebShare Cloud, and FARO CAD Zone packages.

For more information about FARO’s 3D scanning solutions visit: www.faro.com

This press release contains forward-looking statements within the meaning of the Private Securities Litigation Reform Act of 1995 that are subject to risks and uncertainties, such as statements about demand for and customer acceptance of FARO’s products, and FARO’s product development and product launches. Statements that are not historical facts or that describe the Company’s plans, objectives, projections, expectations, assumptions, strategies, or goals are forward-looking statements. In addition, words such as “is,” “will,” and similar expressions or discussions of FARO’s plans or other intentions identify forward-looking statements. Forward-looking statements are not guarantees of future performance and are subject to various known and unknown risks, uncertainties, and other factors that may cause actual results, performances, or achievements to differ materially from future results, performances, or achievements expressed or implied by such forward-looking statements. Consequently, undue reliance should not be placed on these forward-looking statements.

Factors that could cause actual results to differ materially from what is expressed or forecasted in such forward-looking statements include, but are not limited to:

- development by others of new or improved products, processes or technologies that make the Company’s products less competitive or obsolete;

- the Company’s inability to maintain its technological advantage by developing new products and enhancing its existing products;

- declines or other adverse changes, or lack of improvement, in industries that the Company serves or the domestic and international economies in the regions of the world where the Company operates and other general economic, business, and financial conditions; and

- other risks detailed in Part I, Item 1A. Risk Factors in the Company’s Annual Report on Form 10-K for the year ended December 31, 2013 and Part II, Item 1A. Risk Factors in the Company’s Quarterly Report on Form 10-Q for the quarter ended June 28, 2014.

Forward-looking statements in this release represent the Company’s judgment as of the date of this release. The Company undertakes no obligation to update publicly any forward-looking statements, whether as a result of new information, future events, or otherwise, unless otherwise required by law.

About FARO

FARO is the world’s most trusted source for 3D measurement technology. The Company develops and markets computer-aided measurement and imaging devices and software. Technology from FARO permits high-precision 3D measurement, imaging and comparison of parts and complex structures within production and quality assurance processes. The devices are used for inspecting components and assemblies, rapid prototyping, documenting large volume spaces or structures in 3D, surveying and construction, as well as for investigation and reconstruction of accident sites or crime scenes.

Approximately 15,000 customers are operating more than 30,000 installations of FARO’s systems worldwide. The Company’s global headquarters is located in Lake Mary, FL; its European regional headquarters in Stuttgart, Germany; and its Asia/Pacific regional headquarters in Singapore. FARO has other offices in the United States, Canada, Mexico, Brazil, Germany, the United Kingdom, France, Spain, Italy, Poland, Turkey, the Netherlands, Switzerland, Portugal, India, China, Malaysia, Vietnam, Thailand, South Korea, and Japan.

More information is available at http://www.faro.com

SOURCE FARO Technologies, Inc.