3D Sensing Tablet Aims To Replace Multiple Surveyor Tools

Source: TechCrunch

As we reported earlier this year, Google is building a mobile device with 3D sensing capabilities — under the Project Tango moniker. But it’s not the only company looking to combine 3D sensing with mobility.

Spanish startup E-Capture R&D is building a tablet with 3D sensing capabilities aimed at the enterprise space, for example as a portable tool for surveyors, civil engineers, architects and the like. It is due to go on sale at the beginning of 2015.

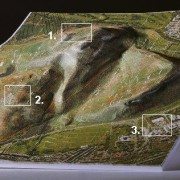

The tablet, called EyesMap, will have two rear 13 megapixel cameras, along with a depth sensor and GPS, which let it measure the coordinates, surfaces and volumes of objects at distances of up to 70 to 80 meters in real time.

So, for instance, it could be used to capture measurements of, or create a 3D model of, a bridge or a building from a distance. It could also model objects as small as insects, so civil engineers could use it to 3D scan individual components.

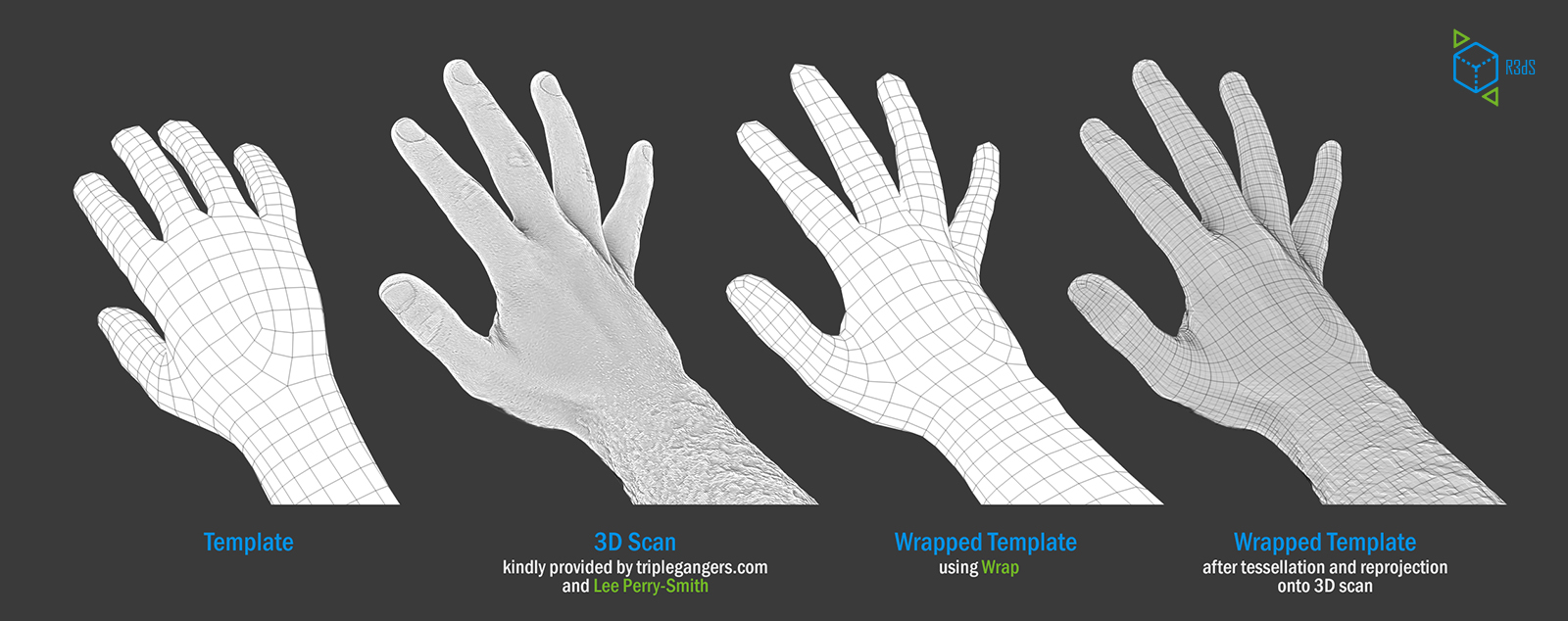

Its makers claim it can build high-resolution models with HD realistic textures.

EyesMap uses photogrammetry to ensure accurate measurements and to build outdoor 3D models, but also has an RGBD sensor for indoor scanning.

The tablet will apparently be able to scan an “advanced photogrammetric picture” with up to 4 million points in around 2 minutes. It will also be able to capture 3D objects in motion. Its makers say it uses a blend of computer vision techniques, photogrammetry, visual odometry, “precision sensor fine tuning” and other image measuring techniques.
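To put the quoted scan figures in perspective, here is a rough back-of-the-envelope sketch of the average capture rate they imply. It assumes the maximum of 4 million dots (points) and a scan of exactly 2 minutes; the function name `points_per_second` is purely illustrative and has nothing to do with E-Capture's software.

```python
def points_per_second(total_points: int, scan_seconds: float) -> float:
    """Average capture rate implied by a point count and a scan duration."""
    if scan_seconds <= 0:
        raise ValueError("scan_seconds must be positive")
    return total_points / scan_seconds


# Figures quoted by the makers: up to 4 million points in around 2 minutes.
# Both values are upper-bound/approximate claims, so the result is only indicative.
rate = points_per_second(4_000_000, 2 * 60)
print(f"about {rate:,.0f} points per second")
```

On those assumptions the claim works out to roughly 33,000 points per second, averaged over the whole scan.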

E-Capture was founded in April 2012 by a group of experienced surveyors and Pedro Ortiz-Coder, a researcher in the laser scanning and photogrammetry field. The business has been founder-funded thus far, but has also received a public grant of €800,000 to help with development.

In terms of where EyesMap fits into the existing enterprise device market, Ortiz-Coder says it’s competing with multiple standalone instruments in the survey field — such as 3D scanners, telemeters, photogrammetry software and so on — but is bundling multiple functions into a single portable device.

“To [survey small objects], a short range laser scanner is required but, a short-range LS cannot capture big or far away objects. That’s why we thought to create a definitive instrument, which permits the user to scan small objects, indoors, buildings, big objects and do professional works with a portable device,” he tells TechCrunch.

“Moreover, there wasn’t in the market any instrument which can measure objects in motion accurately more than 3-4 meters. EyesMap can measure people, animals, objects in motion in real time with a high range distance.”

The tablet will run Windows and, on the hardware front, will have Intel’s 4th generation i7 processor and 16 GB of RAM. Pricing for the EyesMap slate has not yet been announced.

Another 3D mobility project we previously covered, called LazeeEye, was aiming to bring 3D sensing smarts to any smartphone via an add-on device (using just RGBD sensing), although that project fell a little short of its funding goal on Kickstarter.

Also in the news recently, Mantis Vision raised $12.5 million in funding from Qualcomm Ventures, Samsung and others for its mobile 3D capture engine, which is designed to work on handheld devices.

There’s no denying mobile 3D as a space is heating up for device makers, although it remains to be seen how slick the end-user applications end up being — and whether they can capture the imagination of mainstream mobile users or, as with E-Capture’s positioning, carve out an initial user base within niche industries.

FRANKFURT, Germany, November 29, 2012 /PRNewswire/ —
