Leica Geosystems and Autodesk Announce BLK360 $16k 3D Laser Scanner

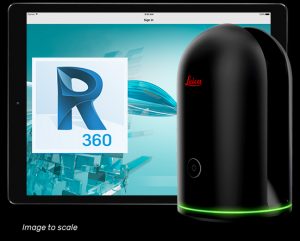

Leica Geosystems Announces Complete Imaging Solution: Leica BLK360 Imaging Laser Scanner and Autodesk ReCap 360 Pro App

Las Vegas, November 16, 2016. Leica Geosystems announced the BLK360, a revolutionary miniaturized black 3D imaging laser scanner. The product was revealed at Autodesk University 2016 and will be bundled with Autodesk’s ReCap 360 Pro and the new ReCap 360 Pro app for iPad. Both companies will demonstrate the product for the duration of the conference at the ReCap booth #2033 and the Leica Geosystems booth #1537.

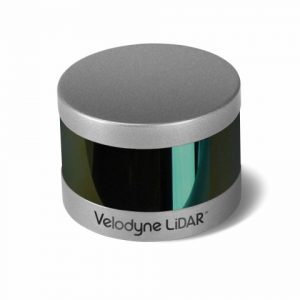

The BLK360 captures the world around you with full-color panoramic images overlaid on a high-accuracy point cloud. The one-button Leica BLK360 is not only the smallest and lightest of its kind, but also offers a simple user experience. Anyone who can operate an iPad can now capture the world around them with high-resolution 3D panoramic images.

The Leica BLK360 defines a new category: the imaging laser scanner. It is so small and light that it fits in a typical messenger bag and can be carried almost anywhere. It features a 60-meter measurement range for full-dome scans. A complete full-dome laser scan, 3D panoramic image capture and transfer to the iPad Pro takes only 3 minutes.

Using the ReCap 360 Pro mobile app, the BLK360 streams image and point cloud data to the iPad. The app filters and registers scan data in real time. After capture, ReCap 360 Pro enables point cloud data transfer to a number of CAD, BIM, VR and AR applications. The integration of the BLK360 and Autodesk software will dramatically streamline the reality capture process, thereby opening this technology to non-surveying individuals.

“When Autodesk first introduced ReCap, it was for one purpose: the democratization of reality capture,” said Aaron Morris, who oversees reality solutions at Autodesk. “We saw the tremendous power of this technology for the AEC industry, but realized that the cost and portability of scanners combined with difficult-to-use data was limiting the adoption of reality capture. Autodesk’s collaboration with Leica Geosystems helps solve these issues by giving just about anyone access to the amazing advantages of reality data.”

“As the leader in the spatial measurement arena, we recognized the gap between Leica Geosystems’ scientific-grade 3D laser scanners and emerging camera and handheld technologies, and set out to bring reality capture to everyone,” said Dr. Burkhard Boeckem, CTO of Hexagon Geosystems. “By combining and miniaturizing technologies available within Hexagon, the BLK360 defines a new category: the Imaging Laser Scanner. It is significantly smaller and lighter (1 kg) than any comparable device on the market. As we developed the ultimate sensor, we worked with Autodesk to create new software and ultimately achieved the next milestone in 3D reality capture. Together with Autodesk’s ReCap 360 Pro, the Leica BLK360 empowers every AEC professional to realize the benefits gained by incorporating high resolution 360° imagery and 3D laser scan data in their daily work.”

The BLK360 & Autodesk ReCap 360 Pro bundle will be available to order in March 2017. The anticipated suggested retail price of the bundle is $15,990/€15,000, which includes the BLK360 scanner, case, battery, charger and an annual subscription to ReCap 360 Pro. For customers who want to secure their spot in line to receive the first batch of BLK360 laser scanners, Autodesk and Leica Geosystems are offering a special limited promotion: a discounted three-year ReCap 360 Pro subscription with a voucher giving priority access to buy the BLK360. Go to this link to learn more.

Leica Geosystems – when it has to be right

Revolutionizing the world of measurement and survey for nearly 200 years, Leica Geosystems creates complete solutions for professionals across the planet. Known for premium products and innovative solution development, Leica Geosystems is trusted by professionals in a diverse mix of industries, such as aerospace and defense, safety and security, construction, and manufacturing, for all their geospatial needs. With precise and accurate instruments, sophisticated software, and trusted services, Leica Geosystems delivers value every day to those shaping the future of our world.

Leica Geosystems is part of Hexagon (Nasdaq Stockholm: HEXA B; hexagon.com), a leading global provider of information technologies that drive quality and productivity improvements across geospatial and industrial enterprise applications.

Autodesk, the Autodesk logo, Autodesk ReCap 360, and Autodesk ReCap 360 Pro are registered trademarks or trademarks of Autodesk, Inc., and/or its subsidiaries and/or affiliates in the USA and/or other countries. All other brand names, product names or trademarks belong to their respective holders. Autodesk reserves the right to alter product and services offerings, and specifications and pricing at any time without notice, and is not responsible for typographical or graphical errors that may appear in this document.