Hennessy Launches “Harmony. Mastered from Chaos.” Interactive Campaign using LiDAR Scans

NEW YORK, June 30, 2016 /PRNewswire/ -- Hennessy, the world’s #1 Cognac, today announced “Harmony. Mastered from Chaos.”, a dynamic new campaign that brings to life the multitude of complex variables that are artfully and expertly mastered by human touch to create the brand’s most harmonious blend, V.S.O.P Privilège. Set to launch June 30th, the campaign showcases the absolute mastery exuded at every stage of crafting this blend. This first campaign in over ten years also offers a glimpse into the inner workings of Hennessy’s mysterious Comité de Dégustation (Tasting Committee), perhaps the ideal example of Hennessy’s mastery, which crafts the same rich, high-quality liquid year over year. Narrated by Leslie Odom, Jr., the campaign features 60-, 30- and 15-second digital spots and an interactive digital experience, adding another vivid chapter to the brand’s “Never stop. Never settle.” platform.

“Sharing the intriguing story of the Hennessy Tasting Committee, its exacting practices and long-standing rituals, illustrates the crucial role that over 250 years of tradition and excellence play in mastering this well-structured spirit,” said Giles Woodyer, Senior Vice President, Hennessy US. “With more and more people discovering Cognac and seeking out the heritage behind brands, we knew it was the right time to launch the first significant marketing campaign for V.S.O.P Privilège.”

Hennessy’s Comité de Dégustation is a group of seven masters, including seventh-generation Master Blender Yann Fillioux, unparalleled in the world of Cognac. These architects of time oversee the eaux-de-vie to ensure that every bottle of V.S.O.P Privilège is perfectly balanced despite the many intricate variables present during creation of the Cognac. From daily tastings at exactly 11 a.m. in the Grand Bureau (whose doors never open to the public) to annual tastings of the entire library of Hennessy eaux-de-vie (one of the largest and oldest in the world), this august body meticulously safeguards the future of Hennessy, its continuity and legacy.

Through a perfectly orchestrated phalanx marked by an abundance of tradition, caring and human touch, V.S.O.P Privilège is created as a complete and harmonious blend: the definitive expression of a perfectly balanced Cognac. Based on a selection of firmly structured eaux-de-vie, aged largely in partially used barrels in order to take on subtle levels of oak tannins, this highly characterful Cognac reveals balanced aromas of fresh vanilla, cinnamon and toasty notes, all coming together with a seamless perfection.

“Harmony. Mastered from Chaos.”

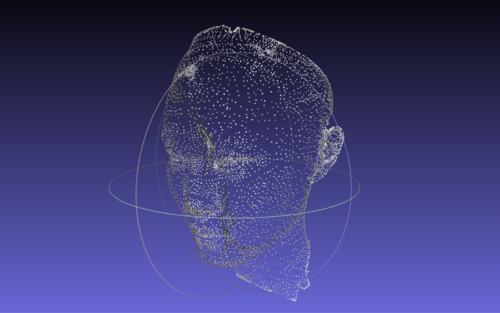

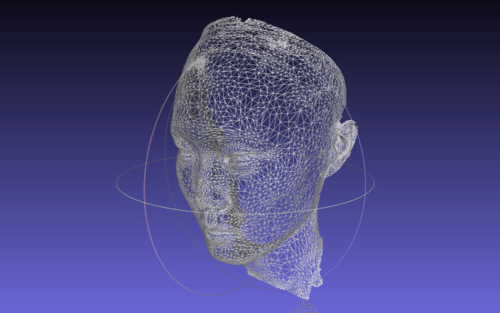

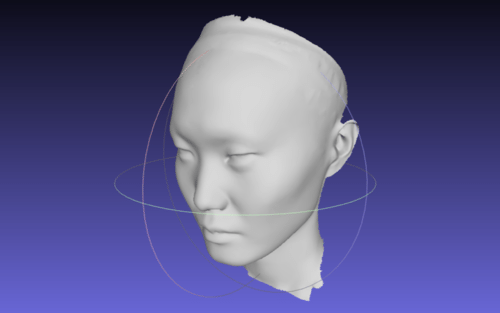

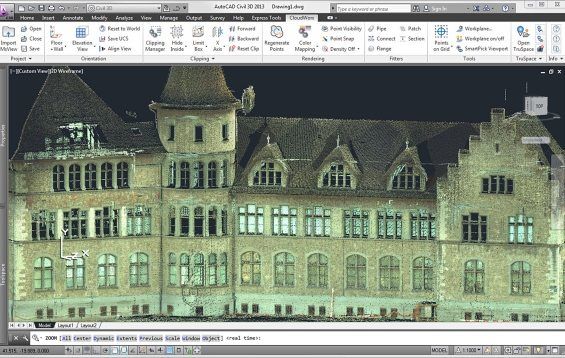

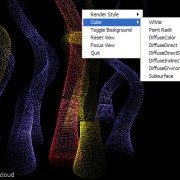

In partnership with Droga5, the film and interactive experience were directed by Ben Tricklebank of Tool of North America, and Active Theory, a Los Angeles-based interactive studio. From the vineyards in Cognac, France, to the distillery and Cognac cellars, viewers are taken on a powerful and modern cinematic journey to experience the scrupulous process of crafting Hennessy V.S.O.P Privilège. The multidimensional campaign uses a combination of live-action footage and technology, including 3D LiDAR scanning, depth capture provided by SCANable, and binaural recording to visualize the juxtaposition of complexity versus mastery that is critical to the Hennessy V.S.O.P Privilège Cognac-making process.

“Harmony. Mastered from Chaos.” will be supported by a fully integrated marketing campaign including consumer events, retail tastings, social and PR initiatives. Consumers will be able to further engage with the brand through the first annual “Cognac Classics Week” hosted by Liquor.com, taking place July 11-18 to demonstrate the harmony that V.S.O.P Privilège adds to classic cocktails. Kicking off on Bastille Day in a nod to Hennessy’s French heritage, mixologists across New York City, Chicago, and Los Angeles will offer new twists on classics such as the French 75, Sidecar, and Sazerac, all crafted with the perfectly balanced V.S.O.P Privilège.

For more information on Cognac Classics Week, including a list of participating bars and upcoming events, visit www.Liquor.com/TBD and follow the hashtag #CognacClassicsWeek.

To learn more about “Harmony. Mastered from Chaos.” visit Hennessy.com or Facebook.com/Hennessy.

ABOUT HENNESSY

In 2015, the Maison Hennessy celebrated 250 years of an exceptional adventure that has lasted for seven generations and spanned five continents.

It began in the French region of Cognac, the seat from which the Maison has constantly passed down the best the land has to give, from one generation to the next. In particular, such longevity is thanks to those people, past and present, who have ensured Hennessy’s success both locally and around the world. Hennessy’s success and longevity are also the result of the values the Maison has upheld since its creation: unique savoir-faire, a constant quest for innovation, and an unwavering commitment to Creation, Excellence, Legacy, and Sustainable Development. Today, these qualities are the hallmark of a House – a crown jewel in the LVMH Group – that crafts the most iconic, prestigious Cognacs in the world.

Hennessy is imported and distributed in the U.S. by Moët Hennessy USA. Hennessy distills, ages and blends a full range of Cognacs: Hennessy V.S, Hennessy Black, V.S.O.P Privilège, X.O, Paradis, Paradis Impérial and Richard Hennessy. For more information and where to purchase/engrave, please visit Hennessy.com.

Video – https://youtu.be/vp5e8YV0pjc

Photo – http://photos.prnewswire.com/prnh/20160629/385105

Photo – http://photos.prnewswire.com/prnh/20160629/385106

SOURCE Hennessy
