Capturing Real-World Environments for Virtual Cinematography

Written by Matt Workman

Virtual Reality Cinematography

As Virtual Reality HMDs (Oculus) come speeding towards consumers, there is an emerging need to capture 360 media and 360 environments. Capturing a location for virtual reality or virtual production is a task that is well suited for a DP and maybe a new niche of cinematography/photography. Not only are we capturing the physical dimensions of the environment using LIDAR, but we are also capturing the lighting using 360-degree HDR light probes shot with DSLRs on nodal tripod systems.

A LIDAR scanner is essentially a camera that shoots in all directions. It lives on a tripod and it can record the physical dimensions and color of an environment/space. It captures millions of points and saves their position and color to be later used to construct the space digitally.
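To make the "millions of points" idea concrete, here is a minimal sketch of how one scanner measurement (two angles and a distance) could become a colored XYZ point. The `ScanPoint` class, the `to_point` helper, the Z-up axis convention, and the sample values are all my own assumptions for illustration, not the format of any particular scanner.

```python
import math
from dataclasses import dataclass


@dataclass
class ScanPoint:
    """One colored point in the cloud (hypothetical layout)."""
    x: float
    y: float
    z: float
    rgb: tuple  # (r, g, b), each 0-255


def to_point(azimuth_deg, elevation_deg, distance_m, rgb):
    """Convert one range measurement to XYZ.

    Assumes the scanner sits at the origin with Z pointing up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(el)  # distance along the floor plane
    return ScanPoint(
        x=horizontal * math.cos(az),
        y=horizontal * math.sin(az),
        z=distance_m * math.sin(el),
        rgb=rgb,
    )


# Made-up measurements: (azimuth, elevation, distance in meters, color)
samples = [
    (0.0, 0.0, 2.0, (200, 180, 160)),   # straight ahead
    (90.0, 0.0, 3.0, (90, 90, 95)),     # to the side
    (0.0, 90.0, 2.5, (240, 240, 235)),  # straight up at the ceiling
]
cloud = [to_point(*s) for s in samples]
```

A real scan is just this repeated millions of times as the head rotates, which is why the result is called a point cloud.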

Using a DSLR camera and a nodal tripod head, the DP would capture High Dynamic Range (32-bit float HDR) 360-degree probes of the location to record the lighting. This process essentially captures the lighting in the space at a VERY high dynamic range, and that lighting is later reprojected onto the geometry constructed from the LIDAR data.
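Reprojection boils down to asking, for any direction leaving the probe position, which pixel of the 360 image holds the light arriving from that direction. The sketch below assumes the probe is stored as an equirectangular (lat-long) image and uses a Z-up convention; `direction_to_pixel` and the image size are hypothetical names and values of mine, not part of any specific tool.

```python
import math


def direction_to_pixel(direction, width, height):
    """Map a 3D direction to a (column, row) in an equirectangular image.

    Assumes Z is up, azimuth 0 lands at the horizontal center of the image,
    and row 0 is the zenith.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    azimuth = math.atan2(y, x)          # -pi .. pi around the horizon
    elevation = math.asin(z / length)   # -pi/2 .. pi/2 above the horizon
    u = azimuth / (2 * math.pi) + 0.5   # 0 .. 1 across the image
    v = 0.5 - elevation / math.pi       # 0 at the top, 1 at the bottom
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


# Looking along +X in a hypothetical 8192 x 4096 probe
center = direction_to_pixel((1.0, 0.0, 0.0), 8192, 4096)
# Looking straight up
zenith = direction_to_pixel((0.0, 0.0, 1.0), 8192, 4096)
```

For each surface point on the LIDAR-derived geometry, the direction from the probe position to that point picks the HDR pixel that lights it.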

The DP is essentially lighting the entire space in 360 degrees and then capturing it. Imagine an entire day of lighting a space in all directions: lights outside windows, track lighting on walls, practicals, etc. Then imagine capturing that space using the techniques outlined above as an asset to be used later. Once the set is constructed virtually, the director can add actors/props and start filmmaking, like he/she would do on a real set. The virtual cinematographer would then line up the shots, camera moves, and real-time lighting.

I’ve already encountered a similar paradigm as a DP. A few years ago I shot a commercial for Bacardi with a 360 VR camera, and we had to light and block talent in all directions within a loft space. The end user was then able to control which way the camera looked in the web player, but the director/DP controlled its travel path.

360 Virtual Reality Bacardi Commercial

http://www.mattworkman.com/2012/03/18/bacardi-360-virtual-reality/

Capturing a set for VR cinematography would allow the user to control their position in the space as well as which way they were facing. And the talent and interactive elements would be added later.

Final Product: VR Environment Capture

In this video you can see the final product of a location captured for VR. The geometry for the set was created using the LIDAR as a reference. The textures and lighting data are baked in from a combination of the LIDAR color data and the reprojected HDR probes.

After all is said and done, we have captured a location, its textures, and its lighting, and can use it as a digital location however we need: for previs, virtual production, background VFX plates, a real-time asset for Oculus, etc.

SIGGRAPH 2014 and NVIDIA

SG4141: Building Photo-Real Virtual Reality from Real Reality, Byte by Byte

http://www.ustream.tv/recorded/51331701

In this presentation Scott Metzger speaks about his new virtual reality company Nurulize and his work with the Nvidia K5200 GPU and The Foundry’s Mari to create photo-real 360-degree environments. He shows a demo of an environment that was captured in 32-bit float with 8k textures being played in real time on an Oculus Rift, and the results speak for themselves. (The real-time asset was downsampled to 16-bit EXR.)
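To get a feel for what the 32-bit to 16-bit step trades away, here is a small sketch that pushes values through half precision and compares raw texture sizes. I am assuming "8k" means 8192 x 8192 and three channels (RGB) with no compression; the helper names are mine, and none of this reflects how the demo asset was actually built.

```python
import struct


def through_half(value):
    """Round-trip a float through 16-bit half precision (struct format 'e')."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def texture_bytes(size, channels, bytes_per_channel):
    """Uncompressed size of a square texture in bytes."""
    return size * size * channels * bytes_per_channel


# Assumed 8192 x 8192 RGB texture, before any compression
full_mib = texture_bytes(8192, 3, 4) / 2**20  # 32-bit float per channel
half_mib = texture_bytes(8192, 3, 2) / 2**20  # 16-bit half per channel

# Half floats keep about 11 bits of precision, so large values get coarser:
# above 2048 the spacing between representable values is 2.
bright = through_half(2049.0)
dim = through_half(0.1)

print(f"32-bit: {full_mib:.0f} MiB, 16-bit: {half_mib:.0f} MiB")
print(f"2049.0 stored as {bright}, 0.1 stored as {dim}")
```

Halving the memory per texture matters a lot when a scene carries many 8k tiles, and half precision still covers far more range than an 8-bit image.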

Some key technologies mentioned were virtual texture engines, which allow objects to have MANY 8k textures at once using the UDIM model. The environment’s lighting was baked from V-Ray 3 to a custom UDIM Unity shader, supported by Amplify Creations’ beta Unity plug-in.
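The UDIM model is what lets one object carry many textures: UV space is split into 1 x 1 tiles, ten per row, and each tile gets a four-digit number starting at 1001. A minimal sketch of that numbering follows; the `base.####.ext` file naming and the helper names are assumptions of mine for illustration.

```python
import math


def udim_tile(u, v):
    """Return the UDIM tile number for a UV coordinate.

    Tiles run 1001..1010 along U, then step by 10 for each unit of V.
    """
    if not (0 <= u < 10) or v < 0:
        raise ValueError("UDIM expects 0 <= u < 10 and v >= 0")
    return 1001 + math.floor(u) + 10 * math.floor(v)


def tile_filename(base, u, v, ext="exr"):
    """Build a per-tile texture filename (hypothetical naming pattern)."""
    return f"{base}.{udim_tile(u, v)}.{ext}"


first = udim_tile(0.5, 0.5)                # first tile
second_row = udim_tile(0.1, 1.9)           # first tile of the second row
name = tile_filename("loft_wall", 2.5, 1.5)
```

Because every tile can be its own 8k image, a single wall or prop can hold far more texture detail than one map would allow, which is where a virtual texture engine earns its keep.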

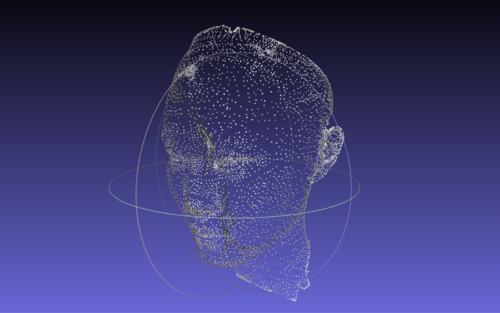

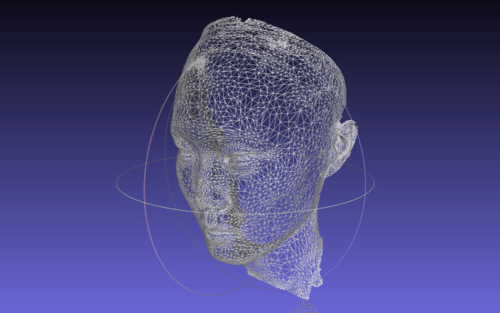

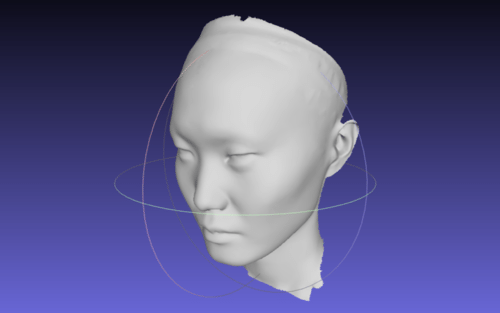

The actors were scanned in using the xxArray photogrammetry system, and Mari was used to project the high-resolution textures. All of this technology was enabled by Nvidia’s Quadro GPU line, which allows fast 8k texture buffering. The actors were later imported into the real-time environment that had been captured and were viewable from all angles through an Oculus Rift HMD.

Virtual Reality Filmmaking

Scott brings up some incredibly relevant and important questions about virtual reality for filmmakers (directors/DPs) who plan to work in virtual reality.

- How do you tell a story in Virtual Reality?

- How do you direct the viewer to face a certain direction?

- How do you create a passive experience on the Oculus?

He even gives a glimpse of the future distribution model for VR content. His demo for the film Rise will be released for Oculus/VR in the following formats:

- A free roam view where the action happens and the viewer is allowed to completely control the camera and point of view.

- A directed view where the viewer can look around but the positioning is dictated by the script/director. This model very much interests me and sounds like a video game.

- And a traditional 2D post-rendered version, like a traditional cinematic or film, best suited for Vimeo/YouTube/DVD/TV.

A year ago this technology seemed like science fiction, but every year we come closer to completely capturing humans (form/texture), their motions, environments with their textures, real world lighting, and viewing them in real time in virtual reality.

The industry is evolving at an incredibly rapid pace and so must the creatives working in it, especially the person responsible for the camera and the lighting: the director of photography.