Matthew McConaughey, Woody Harrelson Revive ‘True Detective’ Roles to Call for Filming in Texas: ‘Hollywood Is a Flat Circle’

Posted in Blog, Featured, LiDAR, Mobile Scanning, Modeling, Photogrammetry, Uncategorized, Visual Effects (VFX) by Ty Taylor

Here are all the nominees for the 23rd Annual VES Awards

Posted in Blog, Featured, LiDAR, Mobile Scanning, Modeling, Photogrammetry, Uncategorized, Visual Effects (VFX) by Ty Taylor

SCANable Strengthens Existing West Coast Presence

Posted in Blog, Featured, LiDAR, Mobile Scanning, Modeling, Photogrammetry, Uncategorized, Visual Effects (VFX) by Ty Taylor

MoPho Studios announced by the leading VFX 3D Scanning Studio – SCANable

Posted in Blog, Featured, LiDAR, Mobile Scanning, Modeling, Photogrammetry, Uncategorized, Visual Effects (VFX) by Ty Taylor

Pinewood Atlanta Studios, Home to ‘Avengers: Endgame,’ Rebrands as Trilith Studios

Posted in Blog, Featured, In the News, LiDAR, Photogrammetry, Point Cloud, Visual Effects (VFX) by Ty Taylor

Fire, Prosthetics, LED’s and VFX: ‘Project Power’

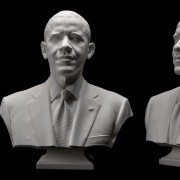

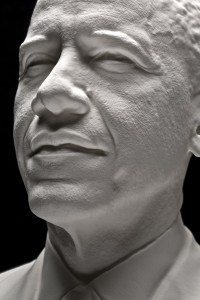

Posted in Blog, Featured, In the News, LiDAR, Photogrammetry, Point Cloud, Visual Effects (VFX) by Ty Taylor

Smithsonian Displays 3D Portrait of President Obama

Posted in 3D Printing, In the News, Photogrammetry, Uncategorized by Travis Reinke

The first presidential portraits created from 3-D scan data are now on display in the Smithsonian Castle. The portraits of President Barack Obama were created based on data collected by a Smithsonian-led team of 3-D digital imaging specialists and include a digital and 3-D printed bust and life mask. A new video released today by the White House details the behind-the-scenes process of scanning, creating and printing the historic portraits. The portraits will be on view in the Commons gallery of the Castle starting today, Dec. 2, through Dec. 31. The portraits were previously displayed at the White House Maker Faire on June 18.

The Smithsonian-led team scanned the President earlier this year using two distinct 3-D documentation processes. Experts from the University of Southern California’s Institute for Creative Technologies used their Light Stage face scanner to document the President’s face from ear to ear in high resolution. Next, a Smithsonian team used handheld 3-D scanners and traditional single-lens reflex cameras to record peripheral 3-D data to create an accurate bust.

The data captured was post-processed by 3-D graphics experts at the software company Autodesk to create final high-resolution models. The life mask and bust were then printed using 3D Systems’ Selective Laser Sintering printers.

The data and the printed models are part of the collection of the Smithsonian’s National Portrait Gallery. The Portrait Gallery’s collection has multiple images of every U.S. president, and these portraits will add to the museum’s current and future holdings representing Obama.

The life-mask scan of Obama joins only three other presidential life masks in the Portrait Gallery’s collection: one of George Washington created by Jean-Antoine Houdon and two of Abraham Lincoln created by Leonard Wells Volk (1860) and Clark Mills (1865). The Washington and Lincoln life masks were created using traditional plaster-casting methods. The Lincoln life masks are currently available to explore and download on the Smithsonian’s X 3D website.

The video below shows an Artec Eva being used to capture a 3D portrait of President Barack Obama, along with the Mobile Light Stage: in essence, eight high-end DSLRs and 50 light sources mounted in a futuristic-looking quarter-circle of aluminum scaffolding. During a facial scan, each camera captures 10 photographs under different lighting conditions, for a total of 80 photographs, all within a single second. Sophisticated algorithms then processed this data into high-resolution 3D models. The Light Stage captured the President’s facial features from ear to ear, similar to the 1860 Lincoln life mask.

About Smithsonian X 3D

The Smithsonian publicly launched its 3-D scanning and imaging program Smithsonian X 3D in 2013 to make museum collections and scientific specimens more widely available for use and study. The Smithsonian X 3D Collection features objects from the Smithsonian that highlight different applications of 3-D capture and printing, as well as digital delivery methods for 3-D data in research, education and conservation. Objects include the Wright Flyer, a model of the remnants of supernova Cassiopeia A, a fossil whale and a sixth-century Buddha statue. The public can explore all these objects online through a free custom-built, plug-in browser and download the data for their own use in modeling programs or to print using a 3-D printer.

Capturing Real-World Environments for Virtual Cinematography

Posted in 3D Laser Scanning, LiDAR, Photogrammetry, Visual Effects (VFX) by Travis Reinke

Source: written by Matt Workman

Virtual Reality Cinematography

As virtual reality HMDs (Oculus) come speeding toward consumers, there is an emerging need to capture 360-degree media and 360-degree environments. Capturing a location for virtual reality or virtual production is a task well suited to a DP, and may become a new niche of cinematography and photography. Not only are we capturing the physical dimensions of the environment using LIDAR, but we are also capturing the lighting using 360-degree HDR light probes shot with DSLRs on nodal tripod systems.

A LIDAR scanner is essentially a camera that shoots in all directions. It sits on a tripod and records the physical dimensions and color of an environment or space, capturing millions of points and saving each point’s position and color so the space can later be reconstructed digitally.
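To make "saves their position and color" concrete, here is a minimal sketch of how a single return could become one colored point. It assumes a generic tripod scanner that measures range plus two angles (azimuth and elevation) from its own origin; the `ScanPoint` type, the angle conventions, and the sample values are illustrative assumptions, not any particular scanner's file format.

```python
import math
from dataclasses import dataclass


@dataclass
class ScanPoint:
    """One colored sample in a point cloud: position in meters, 8-bit RGB color."""
    x: float
    y: float
    z: float
    r: int
    g: int
    b: int


def polar_to_point(range_m, azimuth_deg, elevation_deg, rgb):
    """Convert one (range, azimuth, elevation) return into a Cartesian ScanPoint.

    ASSUMED convention: azimuth is counterclockwise from +X in the horizontal
    plane, elevation is measured upward from that plane, origin = scanner center.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # length of the ray projected onto the XY plane
    return ScanPoint(
        x=horizontal * math.cos(az),
        y=horizontal * math.sin(az),
        z=range_m * math.sin(el),
        r=rgb[0], g=rgb[1], b=rgb[2],
    )


# Hypothetical returns: (range in m, azimuth deg, elevation deg, RGB color)
samples = [
    (10.0, 0.0, 0.0, (200, 180, 160)),
    (5.0, 90.0, 0.0, (90, 90, 90)),
    (2.0, 0.0, 90.0, (255, 255, 255)),
]
cloud = [polar_to_point(r, az, el, c) for r, az, el, c in samples]
for p in cloud:
    print(f"({p.x:.2f}, {p.y:.2f}, {p.z:.2f}) rgb=({p.r}, {p.g}, {p.b})")
```

A real scan repeats this for millions of returns and usually registers several tripod positions into one shared coordinate system.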

Using a DSLR camera and a nodal tripod head, the DP would capture 360-degree High Dynamic Range (32-bit float HDR) probes of the location to record the lighting. This process captures the lighting in the space at a VERY high dynamic range, and that lighting is later reprojected onto the geometry constructed from the LIDAR data.
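The reason a probe needs 32-bit float storage is that it is merged from several bracketed exposures into radiance values that run far past the 0 to 1 range of a single photo. Below is a minimal sketch of such a merge, assuming a linear sensor response (for example, decoded RAW files) and a simple triangle weighting; production HDR tools often recover the camera's response curve first, and the scene values here are hypothetical.

```python
def hat_weight(value):
    """Triangle weight: trust mid-tones, ignore pure black (0) and clipped (1) pixels."""
    return 1.0 - abs(2.0 * value - 1.0)


def merge_exposures(brackets):
    """Merge bracketed exposures of one view into a single HDR image.

    brackets: list of (exposure_time_s, pixels), where pixels is a flat list of
    linear values in [0, 1]. ASSUMPTION: linear sensor response.
    Returns relative radiance per pixel; values may exceed 1.0, which is why
    the result is stored in a floating-point HDR format.
    """
    count = len(brackets[0][1])
    hdr = []
    for i in range(count):
        numerator = 0.0
        denominator = 0.0
        for exposure_time, pixels in brackets:
            value = pixels[i]
            weight = hat_weight(value)
            numerator += weight * (value / exposure_time)  # this bracket's radiance estimate
            denominator += weight
        if denominator > 0.0:
            hdr.append(numerator / denominator)
        else:
            # Every bracket was black or clipped: fall back to the shortest exposure
            # (only a lower bound if that exposure is clipped too).
            shortest_time, shortest_pixels = min(brackets, key=lambda b: b[0])
            hdr.append(shortest_pixels[i] / shortest_time)
    return hdr


# Hypothetical scene: three pixels with relative radiance 0.5, 8 and 50,
# shot at 1 s, 1/10 s and 1/100 s. The sensor clips anything above 1.0.
scene = [0.5, 8.0, 50.0]
times = [1.0, 0.1, 0.01]
brackets = [(t, [min(radiance * t, 1.0) for radiance in scene]) for t in times]
merged = merge_exposures(brackets)
print([round(x, 3) for x in merged])
```

The brightest pixel is clipped in the two longer exposures, so only the 1/100 s frame contributes to its estimate; that is the job a bracketed probe sequence does for windows and practicals in a lit set.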

The DP is essentially lighting the entire space in 360 degrees and then capturing it. Imagine an entire day of lighting a space in all directions: lights outside windows, track lighting on walls, practicals, etc. That space is then captured with the techniques outlined above as an asset to be used later. Once the set is constructed virtually, the director can add actors and props and start filmmaking, just as he or she would on a real set, while the virtual cinematographer lines up the shots, camera moves, and real-time lighting.

I’ve already encountered a similar paradigm as a DP, when I shot a 360 VR commercial. A few years ago I shot a commercial for Bacardi with a 360 VR camera, and we had to light and block talent in all directions within a loft space. The end user could control which way the camera looked in the web player, but the director and DP controlled its travel path.

360 Virtual Reality Bacardi Commercial

http://www.mattworkman.com/2012/03/18/bacardi-360-virtual-reality/

Capturing a set for VR cinematography would allow users to control both their position in the space and which way they are facing, with the talent and interactive elements added later.

Final Product: VR Environment Capture

In this video you can see the final product of a location captured for VR. The geometry for the set was created using the LIDAR as a reference. The textures and lighting data are baked in from a combination of the LIDAR color data and the reprojected HDR probes.
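"Reprojecting" a probe onto geometry boils down to asking, for each surface point, which probe pixel was looking at it. Here is a minimal sketch for one equirectangular (lat-long) probe. The axis convention (+Y up, image center looking down +Z, row 0 straight up) is an assumption because tools differ, and the sketch ignores occlusion: a point hidden behind another object would still receive a probe texel.

```python
import math


def probe_lookup(point, probe_center, width, height):
    """Return (column, row) of the equirectangular probe pixel that "sees" a
    surface point, given the probe's capture position in the same coordinates.

    ASSUMED convention: +Y is up, the image center looks down +Z, longitude
    increases toward +X, and row 0 is straight up.
    """
    dx = point[0] - probe_center[0]
    dy = point[1] - probe_center[1]
    dz = point[2] - probe_center[2]
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("surface point coincides with the probe center")
    dx, dy, dz = dx / length, dy / length, dz / length
    u = 0.5 + math.atan2(dx, dz) / (2.0 * math.pi)  # longitude mapped to 0..1
    v = 0.5 - math.asin(dy) / math.pi               # latitude mapped to 0..1 (0 = up)
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


# Hypothetical 8192 x 4096 probe shot at the origin of the LIDAR coordinate system.
print(probe_lookup((0.0, 0.0, 5.0), (0.0, 0.0, 0.0), 8192, 4096))   # straight ahead
print(probe_lookup((3.0, 0.0, 0.0), (0.0, 0.0, 0.0), 8192, 4096))   # 90 degrees to +X
print(probe_lookup((0.0, 10.0, 0.0), (0.0, 0.0, 0.0), 8192, 4096))  # ceiling
```

In a baking tool this lookup runs per texel of the reconstructed geometry, and the HDR value found there is blended with the LIDAR color data.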

After all is said and done, we have captured a location, its textures, and its lighting, which can be used as a digital location however we need: for previs, virtual production, background VFX plates, a real-time asset for Oculus, etc.

SIGGRAPH 2014 and NVIDIA

SG4141: Building Photo-Real Virtual Reality from Real Reality, Byte by Byte

http://www.ustream.tv/recorded/51331701

In this presentation, Scott Metzger speaks about his new virtual reality company Nurulize and his work with the Nvidia K5200 GPU and The Foundry’s Mari to create photoreal 360-degree environments. He shows a demo of an environment captured in 32-bit float with 8k textures, played back in real time on an Oculus Rift, and the results speak for themselves. (The real-time asset was downsampled to 16-bit EXR.)
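A little arithmetic shows why a real-time asset gets downsampled from 32-bit to 16-bit. The sketch below assumes "8k" means 8192 x 8192 pixels, three color channels, no compression or mipmaps, and that the 16-bit EXR stores half floats; those are assumptions for illustration, not figures from the talk.

```python
MIB = 1024 * 1024


def texture_bytes(size_px, channels, bytes_per_channel):
    """Uncompressed size of one square texture, ignoring mipmaps and compression."""
    return size_px * size_px * channels * bytes_per_channel


# ASSUMPTIONS: "8k" = 8192 x 8192, RGB, and the 16-bit EXR stores half floats.
full_float = texture_bytes(8192, 3, 4)  # 32-bit float per channel
half_float = texture_bytes(8192, 3, 2)  # 16-bit half float per channel
print(full_float // MIB, "MiB per 8k texture at 32-bit float")      # 768
print(half_float // MIB, "MiB per 8k texture at 16-bit half float")  # 384
```

Halving the bytes per channel halves GPU memory and bandwidth per texture, which matters when an environment carries many 8k maps at once.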

Key technologies mentioned included the development of virtual texture engines that allow objects to carry MANY 8k textures at once using the UDIM model. The environment’s lighting was baked from V-Ray 3 into a custom UDIM Unity shader, supported by Amplify Creations’ beta Unity plug-in.
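The UDIM model mentioned here is a tile-numbering convention: UV space is split into 1 x 1 tiles, numbered from 1001, ten tiles per row in U, with each row up in V adding 10. Each tile gets its own texture, which is how one object can carry many 8k maps. Here is a minimal sketch of the addressing only; the streaming logic of a virtual texture engine is not shown.

```python
import math


def udim_tile(u, v):
    """UDIM tile number for a UV coordinate.

    Tiles are 1 x 1 in UV space, numbered from 1001 at (0, 0), ten tiles per
    row in U, and each step up in V adds 10.
    """
    if not 0.0 <= u < 10.0 or v < 0.0:
        raise ValueError("UDIM tiles cover 0 <= u < 10 and v >= 0")
    return 1001 + math.floor(u) + 10 * math.floor(v)


def local_uv(u, v):
    """Coordinate inside the tile, in [0, 1), used to sample that tile's own texture."""
    return u - math.floor(u), v - math.floor(v)


for uv in [(0.5, 0.5), (1.25, 0.5), (0.5, 1.5), (9.9, 0.1)]:
    print(uv, "-> tile", udim_tile(*uv), "local", local_uv(*uv))
```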

The actors were scanned using the xxArray photogrammetry system, and Mari was used to project the high-resolution textures. All of this was enabled by Nvidia’s Quadro GPU line, which allows fast 8k texture buffering. The actors were later imported into the captured real-time environment and were viewable from all angles through an Oculus Rift HMD.

Virtual Reality Filmmaking

Scott brings up some incredibly relevant and important questions about virtual reality for filmmakers (directors/DPs) who plan to work in virtual reality.

- How do you tell a story in Virtual Reality?

- How do you direct the viewer to face a certain direction?

- How do you create a passive experience on the Oculus?

He even gives a glimpse of the future distribution model for VR content. His demo for the film Rise will be released for Oculus/VR in the following formats:

- A free-roam view, in which the viewer has complete control over the camera and point of view as the action happens.

- A directed view, in which the viewer can look around but the positioning is dictated by the script/director. This model very much interests me and sounds like a video game.

- A traditional 2D post-rendered version, like a traditional cinematic or film, best suited for Vimeo/YouTube/DVD/TV.

A year ago this technology seemed like science fiction, but every year we come closer to completely capturing humans (form and texture), their motions, environments with their textures, and real-world lighting, and to viewing them all in real time in virtual reality.

The industry is evolving at an incredibly rapid pace, and so must the creatives working in it, especially the person responsible for the camera and the lighting: the director of photography.

3D Sensing Tablet Aims To Replace Multiple Surveyor Tools

Posted in 3D Laser Scanning, Mobile Scanning, New Technology, Photogrammetry, Point Cloud by Travis Reinke

Source: TechCrunch

As we reported earlier this year, Google is building a mobile device with 3D sensing capabilities — under the Project Tango moniker. But it’s not the only company looking to combine 3D sensing with mobility.

Spanish startup E-Capture R&D is building a tablet with 3D sensing capabilities that’s aiming to target the enterprise space — for example as a portable tool for surveyors, civil engineers, architects and the like — which is due to go on sale at the beginning of 2015.

The tablet, called EyesMap, will have two rear 13 megapixel cameras, along with a depth sensor and GPS to enable it to measure co-ordinates, surface and volumes of objects up to a distance of 70 to 80 meters in real-time.
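The article does not say exactly how EyesMap combines its two cameras, but the textbook principle behind two-camera photogrammetric ranging is triangulation: for rectified images, depth is Z = f·B/d (focal length in pixels times camera baseline, divided by disparity). The sketch below uses hypothetical optics, not EyesMap specifications, and also shows the first-order error term, which grows with the square of distance; that is one reason photogrammetry workflows typically combine shots taken from several positions, effectively widening the baseline.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth (m) of a point matched in two rectified images: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px, baseline_m, depth_m, disparity_error_px=0.5):
    """First-order depth uncertainty for a disparity error: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# HYPOTHETICAL optics, not EyesMap specifications: 3000 px focal length, 10 cm baseline.
FOCAL_PX = 3000.0
BASELINE_M = 0.10

for disparity in (60.0, 4.0):
    z = stereo_depth(FOCAL_PX, BASELINE_M, disparity)
    err = depth_error(FOCAL_PX, BASELINE_M, z)
    print(f"disparity {disparity:>4} px -> depth {z:.1f} m, +/- {err:.2f} m")
```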

For instance, it could be used to measure, or create a 3D model of, a bridge or a building from a distance. It could also model objects as small as insects, so civil engineers could use it to 3D scan individual components.

Its makers claim it can build high-resolution models with HD realistic textures.

EyesMap uses photogrammetry to ensure accurate measurements and to build outdoor 3D models, but also has an RGBD sensor for indoor scanning.
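An RGBD sensor returns a depth value per pixel, and turning that into 3D points is usually done with a pinhole camera model: X = (u − cx)·Z/fx and Y = (v − cy)·Z/fy. Here is a minimal sketch with hypothetical intrinsics and a tiny hypothetical depth frame; a real pipeline would also correct lens distortion and register depth pixels to the color camera so each point gets a texture color.

```python
def backproject(depth_rows, fx, fy, cx, cy):
    """Convert a depth image (rows of depths in meters, 0 = no reading) into 3D
    points in the sensor's camera frame using a pinhole model:
    X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy.
    Lens distortion and depth-to-color registration are ignored.
    """
    points = []
    for v, row in enumerate(depth_rows):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue  # sensor returned nothing for this pixel
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# Hypothetical 2x2 depth frame and intrinsics (not taken from any real sensor).
depth = [[1.0, 0.0],
         [2.0, 2.0]]
cloud = backproject(depth, fx=100.0, fy=100.0, cx=0.5, cy=0.5)
print(cloud)
```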

E-Capture was founded back in April 2012 by a group of experienced surveyors and Pedro Ortiz-Coder, a researcher in the laser scanning and photogrammetry field. The business has been founder-funded thus far but has also received a public grant of €800,000 to help with development.

In terms of where EyesMap fits into the existing enterprise device market, Ortiz-Coder says it’s competing with multiple standalone instruments in the survey field — such as 3D scanners, telemeters, photogrammetry software and so on — but is bundling multiple functions into a single portable device.

“To [survey small objects], a short range laser scanner is required but, a short-range LS cannot capture big or far away objects. That’s why we thought to create a definitive instrument, which permits the user to scan small objects, indoors, buildings, big objects and do professional works with a portable device,” he tells TechCrunch.

“Moreover, there wasn’t in the market any instrument which can measure objects in motion accurately more than 3-4 meters. EyesMap can measure people, animals, objects in motion in real time with a high range distance.”

The tablet will run Windows and, on the hardware front, will have Intel’s 4th generation i7 processor and 16 GB of RAM. Pricing for the EyesMap slate has not yet been announced.

Another 3D mobility project we previously covered, called LazeeEye, was aiming to bring 3D sensing smarts to any smartphone via an add-on device (using just RGBD sensing), although that project fell a little short of its funding goal on Kickstarter.

Also in the news recently, Mantis Vision raised $12.5 million in funding from Qualcomm Ventures, Samsung and others for its mobile 3D capture engine, which is designed to work on handheld devices.

There’s no denying mobile 3D as a space is heating up for device makers, although it remains to be seen how slick the end-user applications end up being — and whether they can capture the imagination of mainstream mobile users or, as with E-Capture’s positioning, carve out an initial user base within niche industries.
