
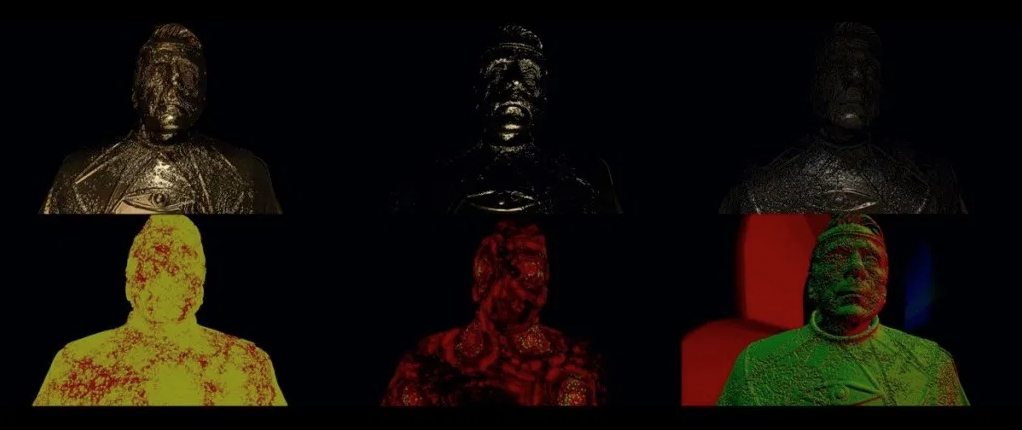

PIXOMONDO REVEALS HOW THEY TURNED JEREMY IRONS INTO GOLD, AND REALIZED THE FUN HOUSE MIRROR EFFECTS.

In episode 109 of HBO’s Watchmen, Adrian Veidt aka Ozymandias (Jeremy Irons) undergoes a carbonizing effect that turns him into a golden statue.

The work was handled by Pixomondo’s Stuttgart branch, which also delivered the shattering mirror effects in the fun house in episode 105. Here, Pixomondo team members use behind-the-scenes imagery to break down how those sequences were achieved.

The Carbonizing Effect

Adam Figielski, visual effects supervisor, Pixomondo: Most of us know the carbon-freezing effect from The Empire Strikes Back. But it was clear early on in the production that this time the procedure should be shown in close-up, not hidden behind a curtain of steam.

Adam Figielski: The carbonite-style effect had to spray onto a live-action Adrian Veidt and then match the look of the prop statue seen elsewhere in the series. The build-up had to be interesting and organic, but happen quickly enough to support the conceit that he is flash-frozen by it. Production VFX supervisor Erik Henry wanted the effect to be as powerful as a liquid nitrogen release.

Adam Figielski: For reference and discussion, we also filmed different deodorant sprays, hair sprays and adhesive sprays hitting various surfaces in slow motion, which made the Stuttgart office smell like a drugstore for days.

Marc Joos, head of CGFX, Pixomondo: For the carbonizing effect we created an infection setup in Houdini that crawled outward over the surface from the point where the spray hit the character. We used it as a mask to reveal several ripple effects and noise patterns that together created the frozen look, and it also provided AOVs for comp to enhance the frosted coating on the surface.
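The idea of an infection mask spreading outward from an impact point can be sketched outside Houdini. This is a minimal illustration, not Pixomondo's setup: it assumes the surface is a list of points plus mesh edges, approximates surface distance with shortest paths along edges (Dijkstra), and turns that distance into a 0 to 1 mask behind a front moving at a constant speed. The function names and the linear falloff are hypothetical choices made for the example.

```python
import heapq
import math


def edge_distances(points, edges, seed):
    """Shortest distance along mesh edges from the seed point to every point (Dijkstra)."""
    neighbours = [[] for _ in points]
    for a, b in edges:
        length = math.dist(points[a], points[b])
        neighbours[a].append((b, length))
        neighbours[b].append((a, length))
    dist = [math.inf] * len(points)
    dist[seed] = 0.0
    queue = [(0.0, seed)]
    while queue:
        d, i = heapq.heappop(queue)
        if d > dist[i]:
            continue  # stale queue entry, a shorter path was already found
        for j, length in neighbours[i]:
            if d + length < dist[j]:
                dist[j] = d + length
                heapq.heappush(queue, (dist[j], j))
    return dist


def infection_mask(dist, time, speed, falloff):
    """Per-point mask in [0, 1]: 1 well behind the front, 0 ahead of it, linear ramp of width falloff.

    Unreachable points (distance inf) stay at 0.
    """
    front = speed * time
    return [min(1.0, max(0.0, (front - d) / falloff)) for d in dist]
```

Evaluated per frame, a mask like this could drive the blend between the untouched skin and the ripple and noise layers, with the ramp region being where the "freezing" is visibly in progress.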

We also used the infection mask to emit a secondary layer of particles from the surface, as a reaction to the spray acting on the body, and advected those particles with the turbulence generated by the underlying pyro sim of the spray.
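The emit-then-advect step can be illustrated with a small sketch. It is an assumption-laden stand-in rather than the production setup: particles are spawned at points whose infection mask crosses a threshold this frame, and are then moved with forward-Euler substeps through a velocity function. The hypothetical `swirl` function takes the place of the velocity field that the pyro sim of the spray would provide.

```python
def emit_particles(points, mask_prev, mask_now, threshold=0.5):
    """Spawn one particle at every point whose mask crossed the threshold this frame."""
    return [list(p) for p, before, after in zip(points, mask_prev, mask_now)
            if before < threshold <= after]


def advect(particles, velocity, dt, substeps=4):
    """Move particles in place through a velocity field using forward-Euler substeps."""
    h = dt / substeps
    for _ in range(substeps):
        for p in particles:
            v = velocity(p)
            for k in range(3):
                p[k] += v[k] * h
    return particles


def swirl(p):
    """Hypothetical stand-in for the pyro velocity field: upward drift plus a swirl about the y axis."""
    x, _, z = p
    return (-z, 1.0, x)


# Usage: emit from newly infected points, then carry them along with the "spray" velocity.
new = emit_particles([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [1.0, 0.0], [1.0, 0.6])
advect(new, swirl, dt=1.0 / 24.0)
```

Substepping keeps the particles from overshooting where the velocity changes quickly; a production solver would typically sample a voxel velocity field and use a higher-order integrator.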

Funhouse Mirrors

Adam Figielski: The shot was tech-vised [by Pixomondo in LA] to determine how to shoot both the primary image of the actor and the reflections. Based on the tech-vis, production placed several cameras at appropriate angles, all filming at the same time, so that every reflection is a synchronized version of the actor’s actual performance. The greenscreen takes needed to match the on-set footage seamlessly, so we first rebuilt the entire set digitally from set scans and on-set photography provided to us by Erik Henry.

One of the more specific challenges here was the timing. To hit the required beats and the timing Erik was looking for, we had to be able to orchestrate every individual glass shard. Multiple reflections behind the actor shatter, revealing further reflections behind him that in turn shatter and break, and so on.

It was tricky to keep the frame clear and readable with all the action, motion blur, depth of field and camera wobble. Too much falling glass, for instance, made the effect look like a waterfall. In the end, we refined the timing in Maya to strike a fine balance between simulated glass falling down and glass shooting out.

Also, the timing and layout of the mirror cracks was built to help tell the story of the psychic blast propagating as a wave through the scene.
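One simple way to make cracks read as a wave is to trigger each mirror when an expanding front reaches it. The sketch below is a hypothetical illustration, not the team's Maya setup: it assumes a spherical front from a single blast origin, a constant speed in scene units per second, 24 fps, and an arbitrary start frame of 1001.

```python
import math


def crack_frames(mirror_positions, blast_origin, wave_speed, fps=24, start_frame=1001):
    """Frame at which each mirror starts cracking, assuming a spherical front expanding at wave_speed."""
    frames = []
    for pos in mirror_positions:
        distance = math.dist(pos, blast_origin)
        frames.append(start_frame + round(distance / wave_speed * fps))
    return frames


# Usage: three mirrors at increasing distance from the blast crack one second apart.
print(crack_frames([(0, 0, 0), (2, 0, 0), (0, 4, 0)], (0, 0, 0), wave_speed=2.0))
```

In practice these trigger frames would only be a starting point that artists then offset by hand to hit story beats.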

Christoph Schmidt, head of 3D, Pixomondo: The first challenge was matchmoving those shots. It took us quite some time to figure out whether the actor was actually directly in front of the camera or whether we were only seeing his reflection. In some shots the camera pointed straight at a mirror that reflected another mirror, which in turn showed our actor in front of many more mirrors. Without a 3D scan of the mirror cabinet, that would have been completely impossible.

The next challenge was recreating the actor’s reflections in all of those shattering mirrors. On set, the actor’s performance was filmed by six cameras surrounding him in 60-degree steps. Our approach was similar to the way games are made: we projected those six plates onto planes that intersect at 60-degree angles where the actor was standing, and used the planes’ normals to drive their visibility. This way, every reflection automatically showed the plate closest to what we would actually see in real life.
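The normal-driven plate selection can be sketched as follows. This is one reading of the description, not the studio's shader: plate i is assumed to face camera i, so plates i and i+3 sit back to back on the same plane, giving three planes crossing at 60 degrees through the actor. For any viewer, including a render camera mirrored across a flat mirror, the plate whose normal points most directly at that viewer is shown; this is equivalent to hiding a plate whenever its normal is more than 30 degrees off the direction to the viewer. All names are hypothetical.

```python
import math

NUM_PLATES = 6
STEP = 2.0 * math.pi / NUM_PLATES  # cameras 60 degrees apart


def plate_normals():
    """Unit normal of plate i, pointing from the actor toward camera i in the horizontal x/z plane."""
    return [(math.cos(i * STEP), 0.0, math.sin(i * STEP)) for i in range(NUM_PLATES)]


def reflect_point(p, plane_point, plane_normal):
    """Mirror a point (e.g. the render camera) across a flat mirror; plane_normal must be unit length."""
    d = sum((a - b) * n for a, b, n in zip(p, plane_point, plane_normal))
    return tuple(a - 2.0 * d * n for a, n in zip(p, plane_normal))


def visible_plate(viewer, actor=(0.0, 0.0, 0.0)):
    """Index of the plate whose normal points most directly at the (possibly mirrored) viewer."""
    dx, dz = viewer[0] - actor[0], viewer[2] - actor[2]
    length = math.hypot(dx, dz)
    to_viewer = (dx / length, 0.0, dz / length)
    scores = [sum(n * v for n, v in zip(normal, to_viewer)) for normal in plate_normals()]
    return max(range(NUM_PLATES), key=scores.__getitem__)


# Usage: the camera sees the actor's front directly, and his back in a mirror behind him.
camera = (10.0, 1.7, 0.0)
direct = visible_plate(camera)
mirrored = visible_plate(reflect_point(camera, (-5.0, 0.0, 0.0), (1.0, 0.0, 0.0)))
```

For multiple bounces, the camera can be reflected across each mirror in turn before the plate is chosen, which is why the correct plate falls out automatically for every reflection.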

Rendering those reflections was also challenging. We really had to bump up the ray depth, and we could not use AOVs the way we usually do. Utility passes such as ZDepth are not raytraced; they only sample the first geometry they hit, which in a mirror shot is the mirror surface itself. Doing motion blur and depth of field in comp was therefore impossible, so both had to be rendered. Mattes were actually raytraced images of constant materials.
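A small ray-tracing calculation shows why a first-hit depth pass breaks down in mirror shots. This is a generic geometric sketch, not the behavior of any particular renderer: a camera ray hits a flat mirror, bounces, and hits a sphere standing in for the actor. A first-hit ZDepth would report only the distance to the mirror, while the reflected image appears at the full unfolded path length, which is the distance depth of field would need to focus on. The helper names and scene values are assumptions for illustration.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def hit_plane(origin, direction, point, normal):
    """Ray parameter t where the ray meets the plane, or None if parallel or behind the origin."""
    denom = dot(direction, normal)
    if abs(denom) < 1e-9:
        return None
    t = dot(sub(point, origin), normal) / denom
    return t if t > 0 else None


def hit_sphere(origin, direction, center, radius):
    """Nearest positive ray parameter t on a sphere; direction must be unit length."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0 else None


def mirror_depths(origin, direction, mirror_point, mirror_normal, center, radius):
    """Return (first-hit depth, reflected path length), or None if either hit is missed."""
    t_mirror = hit_plane(origin, direction, mirror_point, mirror_normal)
    if t_mirror is None:
        return None
    bounce = add(origin, scale(direction, t_mirror))
    # Reflect the ray direction about the mirror normal: r = d - 2 (d . n) n
    reflected = sub(direction, scale(mirror_normal, 2.0 * dot(direction, mirror_normal)))
    t_object = hit_sphere(bounce, reflected, center, radius)
    if t_object is None:
        return None
    return t_mirror, t_mirror + t_object
```

With the camera at the origin looking at a mirror 10 units away and the "actor" 5 units behind the camera, the first-hit depth is 10 while the reflected actor sits 24 units down the ray, so a depth pass would put the focus on the glass rather than on him.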
