Andersson Technologies releases SynthEyes 1502 3D Tracking Software

Andersson Technologies has released SynthEyes 1502, the latest version of its 3D tracking software, improving compatibility with Blackmagic Design’s Fusion compositing software.

Reflecting the renewed interest in Fusion

According to the official announcement: “Blackmagic Design’s recent decision to make Fusion 7 free of charge has led to increased interest in that package. While SynthEyes has exported to Fusion for many years now — for projects such as Battlestar Galactica — Andersson Technologies LLC upgraded SynthEyes’s Fusion export.”

Accordingly, the legacy Fusion exporter now supports 3D planar trackers; primitive, imported, or tracker-built meshes; imported or extracted textures; multiple cameras; and lens distortion via image maps.

The new lens distortion feature should make it possible to reproduce the distortion patterns of any real-world lens without its properties having been coded explicitly in the software or a custom plugin.
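
To see why an image map can stand in for an explicit lens model, here is a minimal sketch in plain Python. It assumes a map that stores, for every output pixel, the normalized coordinates of the source pixel to sample; the actual map format SynthEyes writes is not described in this article, and the function names `apply_distortion_map` and `radial_map` are hypothetical. The compositor side only performs a lookup, so any distortion pattern that can be baked into the map can be reproduced.

```python
def apply_distortion_map(image, st_map):
    """Warp `image` through a per-pixel lookup map (nearest-neighbour sampling).

    image:  list of rows, each a list of pixel values.
    st_map: list of rows of (u, v) pairs in [0, 1]; each pair is the
            normalized source position for that output pixel (assumed format).
    """
    height = len(image)
    width = len(image[0])
    output = []
    for map_row in st_map:
        out_row = []
        for u, v in map_row:
            # Convert normalized coordinates to the nearest source pixel,
            # clamping so lookups outside the frame reuse the edge pixel.
            x = min(max(int(round(u * (width - 1))), 0), width - 1)
            y = min(max(int(round(v * (height - 1))), 0), height - 1)
            out_row.append(image[y][x])
        output.append(out_row)
    return output


def radial_map(width, height, k):
    """Bake a one-coefficient radial model into a lookup map (illustrative only)."""
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            # Centred coordinates in [-1, 1].
            cx = 2 * x / max(width - 1, 1) - 1
            cy = 2 * y / max(height - 1, 1) - 1
            scale = 1 + k * (cx * cx + cy * cy)
            row.append(((cx * scale + 1) / 2, (cy * scale + 1) / 2))
        rows.append(row)
    return rows
```

The warp function never sees the coefficient `k`. Swapping in a map measured from a real lens would need no change to `apply_distortion_map`.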

A new second exporter creates corner pin nodes in Fusion from 2D or 3D planar trackers in SynthEyes.
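
For readers unfamiliar with corner pinning: a corner pin maps the four corners of an image onto four tracked corner positions, which amounts to a planar homography. The sketch below is a generic illustration in plain Python, not the exporter's code or Fusion's node implementation; `solve_linear`, `corner_pin_homography`, and `warp_point` are hypothetical helper names.

```python
def solve_linear(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def corner_pin_homography(src, dst):
    """Return the 3x3 matrix H that maps four src corners onto four dst corners."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear equations per corner, with H[2][2] fixed to 1.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def warp_point(h, x, y):
    """Apply H to a point, including the perspective divide."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)
```

With a planar tracker, the `dst` corners change on every frame, so the homography is recomputed per frame; the source corners stay fixed at the corners of the inserted image.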

Other new features in SynthEyes 1502 include an error curve mini-view, a DNG/CinemaDNG file reader, and a refresh of the user interface, including the option to turn toolbar icons on or off.

Pricing and availability

SynthEyes 1502 is available now for Windows, Linux and Mac OS X. New licences cost from $249 to $999, depending on which edition you buy. The new version is free to registered users.

New features in SynthEyes 1502 include:

- Toolbar icons are back! Some love ’em, some hate ’em. Have it your way: set the preference. Shows both text and icon by default to make it easiest, especially for new users with older tutorials. Some new and improved icons.

- Refresh of user interface color preferences to a somewhat darker and trendier look. Other minor appearance tweaks.

- New error curve mini-view.

- Updated Fusion 3D exporter now exports all cameras, 3D planars, all meshes (including imported), lens distortion via image maps, etc.

- New Fusion 2D corner pinning exporter.

- Lens distortion export via color maps, currently for Fusion (Nuke for testing).

- During offset tracking, a tracker can be (repeatedly) shift-dragged to different reference patterns on any frame, and SynthEyes will automatically adjust the offset channel keying.

- Rotopanel’s Import tracker to CP (control point) now asks whether you want to import the relative motion or absolute position.

- DNG/CinemaDNG reading. Marginal utility: DNG requires much proprietary postprocessing to get usable images, despite new luma and chroma blur settings in the image preprocessor.

- New script to “Reparent meshes to active host” (without moving them)

- New section in the user manual on “Realistic Compositing for 3-D”

- New tutorials on offset tracking and Fusion.

- Upgraded to RED 5.3 SDK (includes REDcolor4, DRAGONcolor2).

- Faster camera and perspective drawing with large meshes and lidar scan data.

- Windows: Installing license data no longer requires “right click/Start as Administrator”—the UAC dialog will appear instead.

- Windows: Automatically keeps the last 3 crash dumps. Even one crash is one too many.

- Windows: Installers, SynthEyes, and Synthia are now code-signed for “Andersson Technologies LLC” instead of showing “Unknown publisher”.

- Mac OS X: Yosemite required that we change to the latest XCode 6—this eliminated support for OS X 10.7. Apple made 10.8 more difficult as well.

About SynthEyes

SynthEyes is a program for 3-D camera-tracking, also known as match-moving. SynthEyes can look at the image sequence from your live-action shoot and determine how the real camera moved during the shoot, what the camera’s field of view (~focal length) was, and where various locations were in 3-D, so that you can create computer-generated imagery that exactly fits into the shot. SynthEyes is widely used in film, television, commercial, and music video post-production.
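
The "field of view (~focal length)" shorthand reflects the standard pinhole-camera relationship between the two, which also depends on the sensor (back plate) width. A minimal sketch, assuming an ideal pinhole camera with no lens distortion; the function names are illustrative and are not part of SynthEyes:

```python
import math


def horizontal_fov_degrees(focal_length_mm, sensor_width_mm):
    """Horizontal field of view of an ideal pinhole camera."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def focal_length_from_fov(fov_degrees, sensor_width_mm):
    """Inverse of horizontal_fov_degrees for the same sensor width."""
    return sensor_width_mm / (2 * math.tan(math.radians(fov_degrees) / 2))
```

Because the sensor width enters the formula, a solved field of view only pins down a focal length once the sensor size is known.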

What can SynthEyes do for me? You can use SynthEyes to help insert animated creatures or vehicles; fix shaky shots; extend or fix a set; add virtual sets to green-screen shoots; replace signs or insert monitor images; produce 3D stereoscopic films; create architectural previews; reconstruct accidents; do product placements after the shoot; add 3D cybernetic implants, cosmetic effects, or injuries to actors; produce panoramic backdrops or clean plates; build textured 3-D meshes from images; add 3-D particle effects; or capture body motion to drive computer-generated characters. And those are just the more common uses; we’re sure you can think of more.

What are its features? Take a deep breath! SynthEyes offers 3-D tracking, set reconstruction, stabilization, and motion capture. It handles camera tracking, 2- and 3-D planar tracking, object tracking, object tracking from reference meshes, camera+object tracking, survey shots, multiple-shot tracking, tripod (nodal, 2.5-D) tracking, mixed tripod and translating shots, stereoscopic shots, nodal stereoscopic shots, zooming shots, lens distortion, and light solving. It can handle shots of any resolution, including HD, film, and IMAX (the Intro version is limited to 1920×1080), with 8-bit, 16-bit, or 32-bit float data, and can be used on shots with thousands of frames. A keyer simplifies and speeds tracking for green-screen shots. The image preprocessor helps remove grain, compression artifacts, off-centering, or varying lighting, and improves low-contrast shots. Textures can be extracted for a mesh from the image sequence, producing higher resolution and lower noise than any individual image. A revolutionary Instructible Assistant, Synthia™, helps you work faster and better, from spoken or typed natural language directions.

SynthEyes offers complete control over the tracking process for challenging shots, including an efficient workflow for supervised trackers, combined automated/supervised tracking, offset tracking, incremental solving, rolling-shutter compensation, a hard and soft path locking system, distance constraints for low-perspective shots, and cross-camera constraints for stereo. A solver phase system lets you set up complex solving strategies with a visual node-based approach (not in Intro version). You can set up a coordinate system using tracker constraints, camera constraints, an automated ground-plane-finding tool, alignment to a mesh, a line-based single-frame alignment system, manual adjustment, or some cool phase techniques.

Eyes starting to glaze over at all the features? Don’t worry, there’s a big green AUTO button too. Download the free demo and see for yourself.

What can SynthEyes talk to? SynthEyes is a tracking app; you’ll use the other apps you already know to generate the pretty pictures. SynthEyes exports to about 25 different 2-D and 3-D programs. The Sizzle scripting language lets you customize the standard exports, or add your own imports, exports, or tools. You can customize toolbars, color scheme, keyboard mapping, and viewport configurations too. Advanced customers can use the SyPy Python API/SDK.

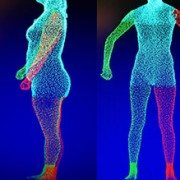

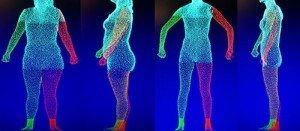

The tablet will apparently be able to scan an “advanced photogrammetric picture” with up to 4 million dots in around 2 minutes. It will also be able to capture 3D objects in motion. It uses a blend of computer vision techniques, photogrammetry, visual odometry, “precision sensor fine tuning” and other image measuring techniques, say its makers.
